Discover the groundbreaking research by XYZ Company on a new cancer treatment using nanotechnology. Results show promising potential for more effective and targeted therapies.

Discover how Company X revolutionized the tech industry with its groundbreaking AI technology. Find out how its product has disrupted traditional business models and set new standards for innovation.

Discover how innovative startups are revolutionizing the tech industry with groundbreaking AI solutions. From autonomous vehicles to personalized healthcare, these companies are pushing the boundaries of what is possible.

Discover the groundbreaking collaboration between Tesla and SpaceX in developing innovative battery technology. This partnership aims to revolutionize the energy storage and transportation industries.

Discover how Company X revolutionized the industry with its groundbreaking product, leading to a surge in profits and customer satisfaction. Learn about the innovative technology behind its success and how it is shaping the future of the market.

Discover how tech giants Apple and Google are teaming up to develop a contact-tracing app for COVID-19. The app will use Bluetooth technology to track interactions and notify users of potential exposure.

Discover the latest breakthrough in AI technology with the unveiling of Tesla's new self-driving car. The revolutionary vehicle promises to redefine the future of transportation.

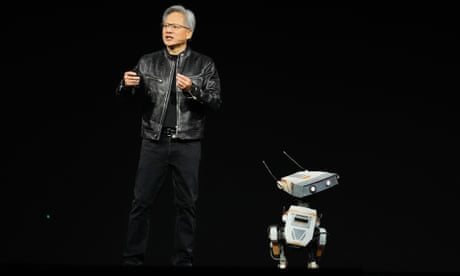

Discover the latest breakthrough in AI technology by leading companies. Explore how cutting-edge products are revolutionizing industries.

Discover how innovative tech startups are revolutionizing the healthcare industry with AI-driven solutions. From personalized medicine to remote patient monitoring, these companies are changing the game.

Discover how Company X revolutionized the tech industry with its groundbreaking AI technology, leading to a surge in market dominance. Learn about the unexpected partnership between Company Y and Company Z that resulted in the creation of a game-changing product set to disrupt the market.

Discover the groundbreaking research by Tesla on sustainable energy solutions. Learn how its innovative technologies are revolutionizing the automotive industry.

Discover how innovative startup XYZ is revolutionizing the healthcare industry with its groundbreaking AI technology. Learn how its product is streamlining processes and improving patient care.

Discover the groundbreaking collaboration between Tesla and SpaceX in developing new sustainable energy solutions. Explore the innovative technologies and bold strategies driving the future of clean transportation and space exploration.

Discover the latest breakthrough in AI technology with the launch of Tesla's self-driving cars. Learn how this innovation is revolutionizing the automotive industry.

Discover the latest breakthrough in AI technology by Google. Its new product promises to revolutionize the way we interact with machines.