AWS introduces Web Bot Auth, a solution for AI agents to browse the web securely. It reduces CAPTCHA friction by letting agents verify their identities cryptographically with the new IETF protocol.

MedCognetics' AI technology, deployed on the Women Cancer Screening Van in rural India, provides low-cost, high-quality breast cancer screenings to thousands of women and connects those with abnormal findings to expert doctors for further testing and treatment. MedCognetics' FDA-cleared AI systems, deployed on NVIDIA platforms, enhance digital mammography with image denoising and motion suppression, bridging the health...

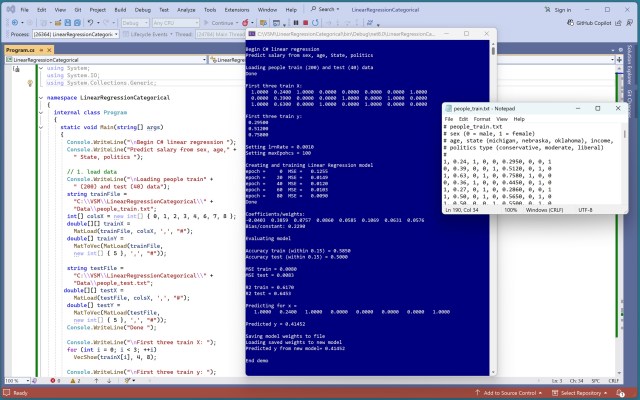

A C# implementation of linear regression predicts salary from sex, age, state, and politics using one-hot encoding and basic SGD. It achieved 91.5% training accuracy and 95.0% test accuracy, with an MSE of 0.000671.
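
As a rough illustration of the technique named above, here is a minimal Python sketch of linear regression trained with per-sample SGD on one-hot encoded features. It is not the C# implementation itself. The category lists, the feature scaling, the learning rate, the synthetic data generator, and the "within 10% of the true value" definition of accuracy are all assumptions made for the example.

```python
import random

# Hypothetical category lists; the original item does not say which values it uses.
STATES = ["michigan", "nebraska", "oklahoma"]
POLITICS = ["conservative", "moderate", "liberal"]


def one_hot(value, categories):
    """Return a list with 1.0 at the position of `value` and 0.0 elsewhere."""
    return [1.0 if value == c else 0.0 for c in categories]


def encode(sex, age, state, politics):
    """Encode one person: sex as 0/1, age scaled by 100, state and politics one-hot."""
    return ([1.0 if sex == "F" else 0.0, age / 100.0]
            + one_hot(state, STATES) + one_hot(politics, POLITICS))


def predict(weights, bias, x):
    """Linear model: weighted sum of the features plus a bias term."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def train_sgd(rows, targets, lr=0.05, epochs=1000, seed=0):
    """Basic SGD on squared error, updating after every single training row."""
    rng = random.Random(seed)
    weights = [0.0] * len(rows[0])
    bias = 0.0
    order = list(range(len(rows)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit rows in a fresh random order each epoch
        for i in order:
            err = predict(weights, bias, rows[i]) - targets[i]
            for j, xj in enumerate(rows[i]):
                weights[j] -= lr * err * xj
            bias -= lr * err
    return weights, bias


def mse(weights, bias, rows, targets):
    """Mean squared error over a dataset."""
    return sum((predict(weights, bias, x) - y) ** 2
               for x, y in zip(rows, targets)) / len(rows)


def accuracy(weights, bias, rows, targets, tol=0.10):
    """Assumed definition: a prediction counts as correct if within tol of the target."""
    hits = sum(1 for x, y in zip(rows, targets)
               if abs(predict(weights, bias, x) - y) < tol * abs(y))
    return hits / len(rows)


def make_demo_data(n, seed=1):
    """Synthetic, noise-free data; salary is expressed in units of $100,000."""
    rng = random.Random(seed)
    rows, targets = [], []
    for _ in range(n):
        sex = rng.choice(["M", "F"])
        age = rng.randint(20, 65)
        state = rng.choice(STATES)
        politics = rng.choice(POLITICS)
        salary = (0.20 + (0.05 if sex == "F" else 0.0) + 0.5 * age / 100.0
                  + 0.03 * STATES.index(state) + 0.02 * POLITICS.index(politics))
        rows.append(encode(sex, age, state, politics))
        targets.append(salary)
    return rows, targets


if __name__ == "__main__":
    rows, targets = make_demo_data(40)
    w, b = train_sgd(rows, targets)
    print("MSE:", mse(w, b, rows, targets))
    print("accuracy (within 10%):", accuracy(w, b, rows, targets))
```

Because regression predicts a number rather than a class, an accuracy figure needs a tolerance of this kind. The threshold used here is illustrative and may differ from the one behind the reported percentages.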

Character.AI will ban users under 18 from chatting with its virtual companions in late November due to legal scrutiny over mental health concerns and a proposed bill to restrict minors' access to AI.

Microsoft reports earnings of $3.72 per share, overcoming concerns over AI spending. Its deal with OpenAI lifts the company's value to over $4tn despite an Azure and 365 outage.

NVIDIA partners with universities and cities nationwide to embed AI education and innovation, driving economic growth and workforce development. Initiatives like Utah's AI factory and Rancho Cordova's AI ecosystem aim to prepare the next generation for the global AI economy.

Emma Thompson expressed frustration with Microsoft's Copilot AI offering to rewrite her scripts. The Oscar-winning actor shared her "intense irritation" with the growing presence of AI in writing during an interview with Stephen Colbert.

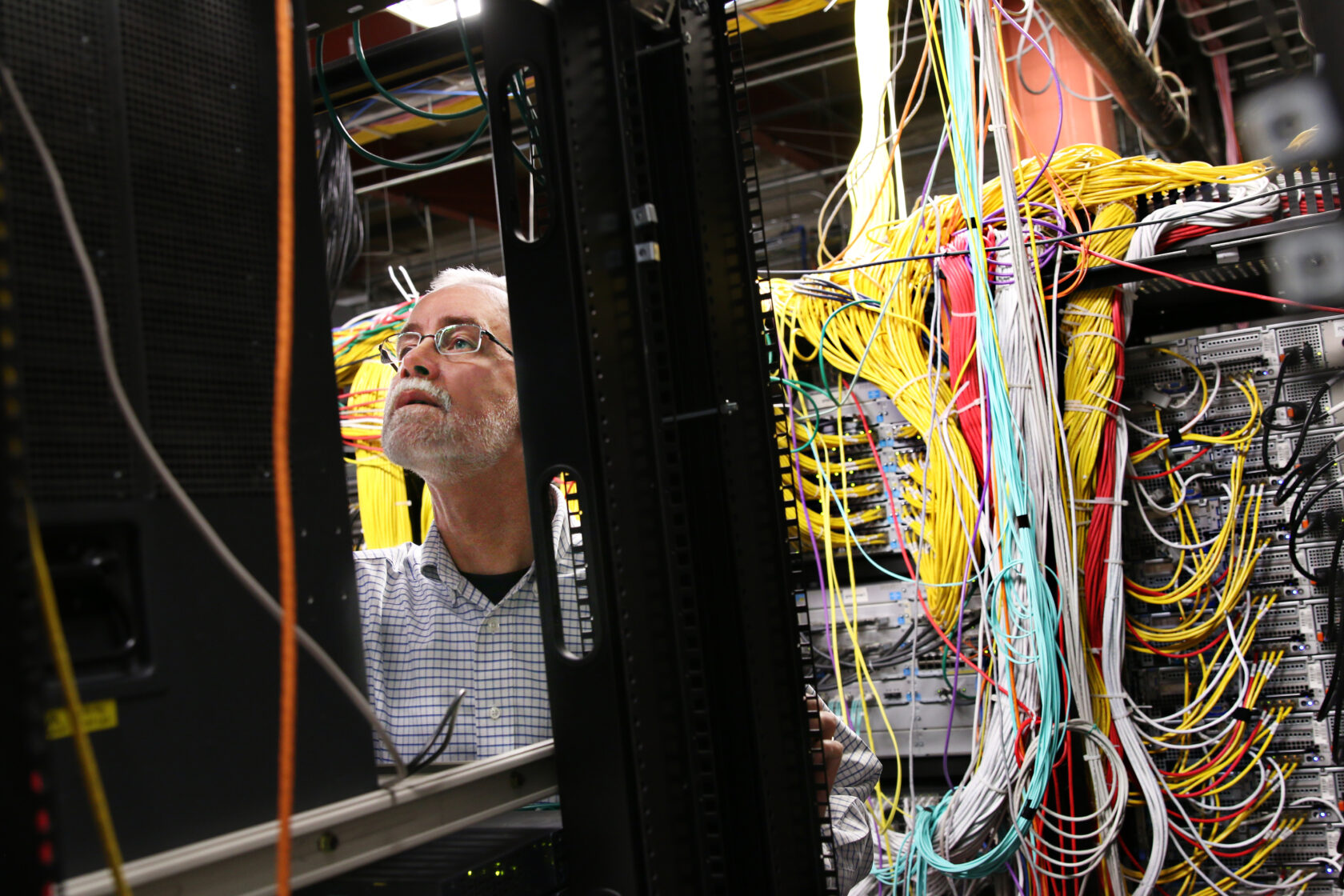

Alphabet beats estimates with $100bn revenue, plans multibillion-dollar AI investment in datacenters for cloud services. Shares rise as company increases capital expenditure guidance to $91-93bn for infrastructure supporting AI products.

Male Allies UK raises concerns about teenage boys' increasing reliance on hyper-personalised AI chatbots for therapy, companionship, and relationships. Survey shows over a third of boys in secondary schools considering AI friends, sparking worries about socialisation and boundary respect.

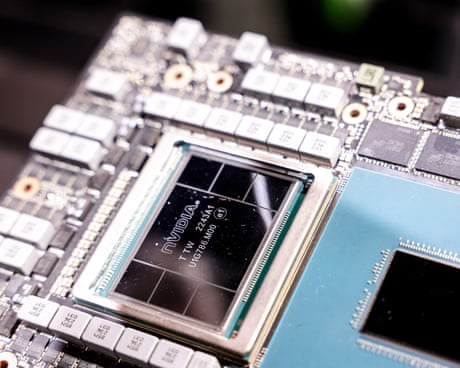

Nvidia surpasses $5tn valuation, leading the AI industry boom. Outgrowing competitors, its value now exceeds the GDP of India, Japan, or the UK.

Nigel Newton of Bloomsbury believes AI can help authors overcome writer's block, backed by the authority of big brand names. Separately, Labour's moral exhaustion after a short time in office raises questions about the party's stamina.

Elon Musk launches Grokipedia, an AI-powered encyclopedia aligning with his right-wing views, sparking controversy over accuracy and transparency. Grokipedia claims to be "fact-checked" by Musk's AI chatbot, challenging the traditional human-authored model of Wikipedia.

AI-integrated browsers like ChatGPT Atlas are the next big thing after chatbots. Major players are now developing browsers with deep AI integrations for personalized online assistance.

Australian Federal Police will use AI to decode slang and emojis used by online crime networks targeting young girls, says AFP commissioner Krissy Barrett. Efforts to crack down on sadistic online exploitation and 'crimefluencers' are underway to protect vulnerable teens.

OpenAI, creator of ChatGPT, transitions to for-profit status for easier capital raising. Approval from Delaware AG marks end of legal saga.