The Wall Street Journal's heatmaps show the impact of vaccines on diseases in the US. This piece recreates the measles heatmap with Matplotlib's pcolormesh(), showcasing the power of data storytelling.
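To give a feel for the pcolormesh() approach, here is a minimal sketch. It assumes synthetic incidence values rather than the real measles figures: the row labels, the year range, the random data, and the `build_heatmap` helper are placeholders invented for illustration, and only the 1963 marker (the year the measles vaccine was licensed in the US) is a real-world fact.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np


def build_heatmap(values, years, labels, vaccine_year):
    """Draw a rows-by-years heatmap with pcolormesh and return (fig, mesh)."""
    fig, ax = plt.subplots(figsize=(10, 4))
    # pcolormesh takes cell edges, so each axis needs one more edge than cells.
    x_edges = np.arange(years[0], years[-1] + 2)
    y_edges = np.arange(len(labels) + 1)
    mesh = ax.pcolormesh(x_edges, y_edges, values, cmap="viridis",
                         edgecolors="white", linewidth=0.2)
    # Vertical line marking the year the vaccine was introduced.
    ax.axvline(vaccine_year, color="black", linewidth=1.5)
    ax.set_yticks(np.arange(len(labels)) + 0.5)
    ax.set_yticklabels(labels)
    ax.invert_yaxis()  # first row at the top, as in a table
    fig.colorbar(mesh, ax=ax, label="cases per 100,000 (synthetic)")
    return fig, mesh


# Placeholder data: 8 hypothetical rows, one column per year.
years = np.arange(1930, 2001)
labels = [f"State {i}" for i in range(1, 9)]
rng = np.random.default_rng(0)
values = rng.gamma(2.0, 300.0, size=(len(labels), len(years)))
values[:, years >= 1963] *= 0.05  # artificial drop after the marker year

fig, mesh = build_heatmap(values, years, labels, vaccine_year=1963)
```

Calling `fig.savefig("heatmap.png")` afterwards writes the chart to disk; swapping in real incidence data only requires replacing `values`, `years`, and `labels`.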

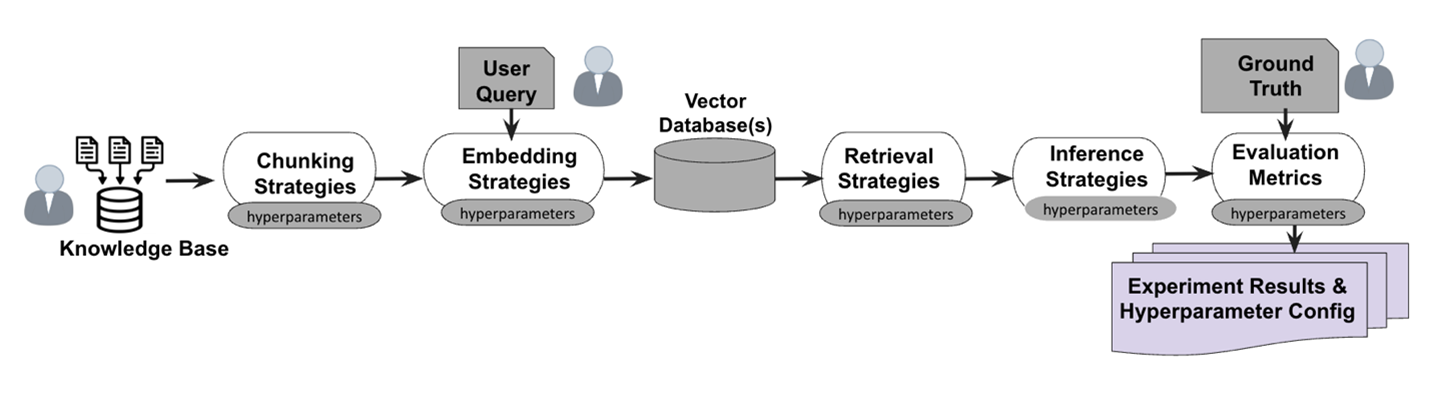

RAG and fine-tuning are two methods for enhancing Large Language Models like ChatGPT and Gemini. RAG retrieves data from external knowledge sources and adds it to the model's input, giving access to up-to-date information without retraining, while fine-tuning adapts the model itself to specific requirements. Both extend what LLMs can do across a range of applications.
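The retrieval half of RAG can be sketched in a few lines. This is a toy illustration under stated assumptions, not how any production system works: it ranks documents by bag-of-words cosine similarity instead of learned embeddings, the three documents are made up, and the resulting prompt would still have to be sent to an actual model.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(documents,
                    key=lambda d: cosine(q, bag_of_words(d)),
                    reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Prepend the retrieved context to the question (the 'augmented' input)."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base.
documents = [
    "The office cafeteria is open from 8am to 3pm on weekdays.",
    "Expense reports must be submitted by the fifth day of each month.",
    "The VPN client must be updated before connecting from home.",
]
prompt = build_prompt("When is the cafeteria open?", documents)
print(prompt)
```

Fine-tuning has no equivalent at inference time: it changes the model's weights ahead of time, whereas this sketch only changes what goes into the prompt.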

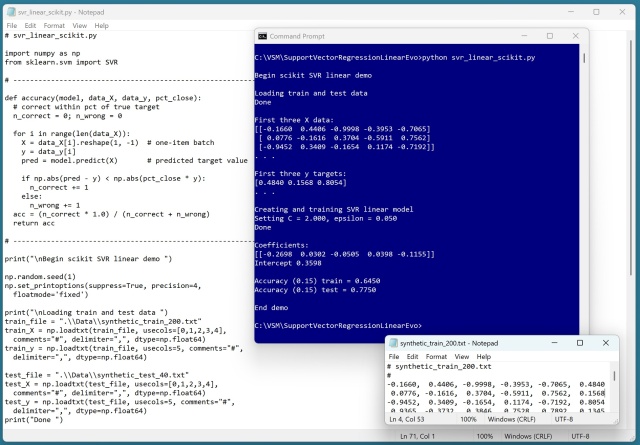

Support Vector Regression (SVR) with a linear kernel ignores errors that fall inside a tube of width epsilon around the fitted line and penalizes points outside it, with the strength of that penalty set by the C parameter. Despite its added complexity, SVR yields results similar to plain linear regression on linear data, which makes it less practical there.
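The roles of C and epsilon are easiest to see in the loss itself. The sketch below writes out the standard epsilon-insensitive loss and the one-feature linear SVR objective by hand; the function names and the four data points are illustrative assumptions, and it only evaluates the objective for a given line rather than solving for the best one.

```python
def epsilon_insensitive_loss(residual, epsilon):
    """Zero inside the epsilon tube, linear in the excess outside it."""
    return max(0.0, abs(residual) - epsilon)


def svr_objective(w, b, xs, ys, C, epsilon):
    """Linear SVR objective for one feature: 0.5*w^2 + C * sum of losses."""
    regularizer = 0.5 * w * w
    penalty = sum(epsilon_insensitive_loss(y - (w * x + b), epsilon)
                  for x, y in zip(xs, ys))
    return regularizer + C * penalty


# Made-up data: three points near the line y = x and one outlier.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.0, 1.05, 1.95, 6.0]

for x, y in zip(xs, ys):
    residual = y - x  # residual against the candidate line w=1, b=0
    print(x, y, epsilon_insensitive_loss(residual, epsilon=0.1))

print(svr_objective(w=1.0, b=0.0, xs=xs, ys=ys, C=1.0, epsilon=0.1))
```

Only the outlier contributes to the penalty here; raising C makes that contribution weigh more against the regularizer, while raising epsilon widens the tube so more points cost nothing.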

FloTorch compared Amazon Nova models with OpenAI’s GPT-4o, finding Amazon Nova Pro faster and more cost-effective. Amazon Nova Micro and Amazon Nova Lite also outperformed GPT-4o-mini in accuracy and affordability.

GPT-3 sparked interest in Large Language Models (LLMs) like ChatGPT. Learn how LLMs process text through tokenization and neural networks.
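As a rough illustration of the tokenization step, the sketch below maps text to integer ids with a word-level vocabulary. This is a deliberate simplification: production LLMs use subword schemes such as byte-pair encoding, and the helper names and two-sentence corpus here are invented for the example.

```python
import re


def split_text(text):
    """Split lowercase text into word tokens and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(corpus):
    """Assign each distinct token an integer id; id 0 is reserved for unknowns."""
    vocab = {"<unk>": 0}
    for sentence in corpus:
        for token in split_text(sentence):
            vocab.setdefault(token, len(vocab))
    return vocab


def encode(text, vocab):
    """Turn text into the list of ids a neural network would consume."""
    return [vocab.get(token, vocab["<unk>"]) for token in split_text(text)]


def decode(ids, vocab):
    """Map ids back to tokens (whitespace and casing are not restored)."""
    inverse = {i: t for t, i in vocab.items()}
    return " ".join(inverse[i] for i in ids)


corpus = ["LLMs process text as tokens.", "Tokens become numbers."]
vocab = build_vocab(corpus)
ids = encode("LLMs process numbers!", vocab)
print(ids)                 # [1, 2, 8, 0]
print(decode(ids, vocab))  # llms process numbers <unk>
```

The "!" never appeared in the corpus, so it falls back to the unknown id; subword tokenizers exist largely to avoid that fallback by breaking unseen strings into smaller known pieces.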

Researchers are tackling spurious regression in time series analysis, a critical issue often overlooked, with real-world implications. Understanding this concept is vital for economists, data scientists, and analysts to avoid misleading conclusions in their models.
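A small simulation shows the effect. The sketch assumes two independent random walks with no relationship at all, regresses one on the other, and compares the R² in levels with the R² after differencing; the seed, series length, and helper names are arbitrary choices for illustration.

```python
import random


def random_walk(n, rng):
    """Cumulative sum of independent standard-normal steps."""
    level, path = 0.0, []
    for _ in range(n):
        level += rng.gauss(0.0, 1.0)
        path.append(level)
    return path


def r_squared(x, y):
    """R^2 of a simple OLS regression of y on x (squared Pearson correlation)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return (sxy * sxy) / (sxx * syy)


def differences(series):
    """First differences, which turn a random walk back into its steps."""
    return [b - a for a, b in zip(series, series[1:])]


rng = random.Random(42)
x = random_walk(500, rng)
y = random_walk(500, rng)

r2_levels = r_squared(x, y)
r2_diffs = r_squared(differences(x), differences(y))
print(f"R^2 in levels:      {r2_levels:.3f}")
print(f"R^2 in differences: {r2_diffs:.3f}")
```

Across repeated runs with different seeds, the levels regression often reports a sizeable R² even though the series are unrelated, while the differenced regression stays close to zero; any single seed may or may not show a dramatic gap.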

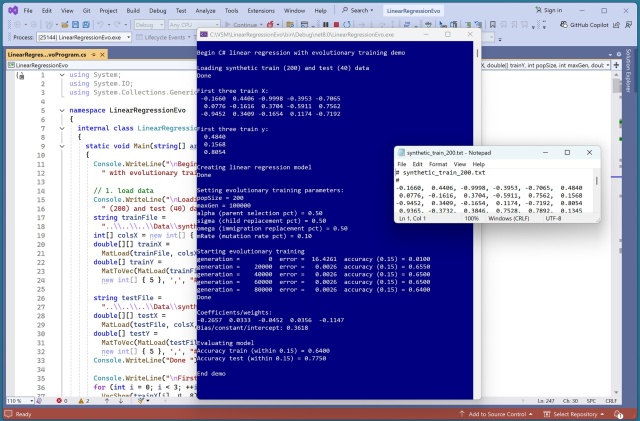

A demo showcases evolutionary training for linear regression using C#. It uses a neural network to generate synthetic data. According to the demo, the evolutionary algorithm outperforms traditional training methods in accuracy.
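The general idea of evolutionary training can be sketched as follows. This is not the demo's code: it is written in Python rather than C#, generates data from a plain linear function instead of a neural network, and uses a simple keep-the-best-and-mutate scheme whose population size, mutation scale, and decay rate are arbitrary assumptions.

```python
import random


def mse(weights, data):
    """Mean squared error of the line y = w*x + b on the data."""
    w, b = weights
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def evolve(data, generations=200, pop_size=20, sigma=0.5, seed=0):
    """Evolve (w, b) pairs; returns the best pair and the best error per generation."""
    rng = random.Random(seed)
    population = [(rng.uniform(-5, 5), rng.uniform(-5, 5))
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=lambda ind: mse(ind, data))
        history.append(mse(population[0], data))
        parents = population[: pop_size // 4]  # the fittest survive unchanged
        children = []
        while len(parents) + len(children) < pop_size:
            w, b = rng.choice(parents)
            # Mutation: add Gaussian noise to a copy of a surviving parent.
            children.append((w + rng.gauss(0, sigma), b + rng.gauss(0, sigma)))
        population = parents + children
        sigma *= 0.98  # shrink mutations as the search settles
    best = min(population, key=lambda ind: mse(ind, data))
    history.append(mse(best, data))
    return best, history


# Synthetic, noise-free data from y = 2x + 1 on x in [-1, 1].
data = [(x / 10.0, 2.0 * (x / 10.0) + 1.0) for x in range(-10, 11)]
best, history = evolve(data)
print("best (w, b):", best)
print("final MSE:", history[-1])
```

Because the fittest individuals are carried over unchanged, the best error can never get worse from one generation to the next. No gradients are needed, which is the main appeal of the approach; for plain linear regression, a closed-form least-squares fit remains the simpler baseline.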

Data team structure is crucial for leveraging Data and AI effectively. Centralized teams can become bottlenecks without proper domain expertise integration.

Octus transforms credit analysis with its AI-driven CreditAI chatbot, offering instant insights on thousands of companies. Octus migrated CreditAI to Amazon Bedrock with zero downtime, enhancing performance and scalability.

LettuceDetect, a lightweight hallucination detector for RAG pipelines, surpasses prior models, offering efficiency and open-source accessibility. Large Language Models face hallucination challenges, but LettuceDetect helps spot and address inaccuracies, enhancing reliability in critical domains.

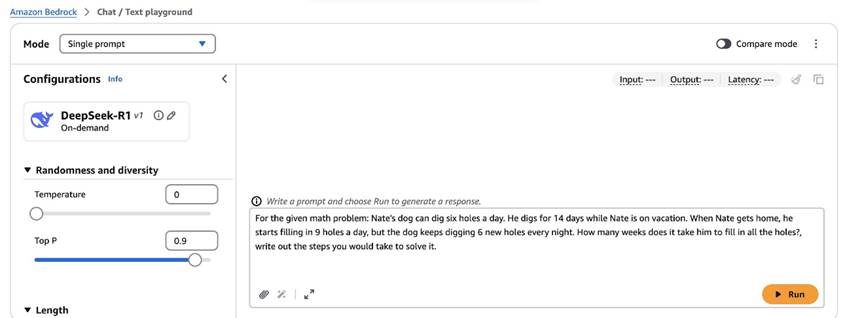

DeepSeek-R1 models on Amazon Bedrock Marketplace show impressive math benchmark performance. Optimize thinking models with prompt optimization on Amazon Bedrock for more succinct thinking traces.

Microsoft and Google have unveiled new AI models that simulate video game worlds. Microsoft's Muse tool promises to revolutionize game development by letting designers experiment with AI-generated gameplay videos based on real gameplay data from Ninja Theory's Bleeding Edge.

AI struggles to differentiate between similar dog breeds due to entangled features. PawMatchAI uses a unique Morphological Feature Extractor to mimic how human experts recognize breeds, focusing on structured traits.

Autonomous digital assistants like Operator from OpenAI can now order groceries for users, but oversight is crucial. The AI agent can navigate websites and complete tasks, offering a new level of convenience and intrigue.

AI bots are set to assist users on dating apps by flirting, crafting messages, and writing profiles. Experts caution against relying too heavily on artificial intelligence, as it may diminish human authenticity in relationships.