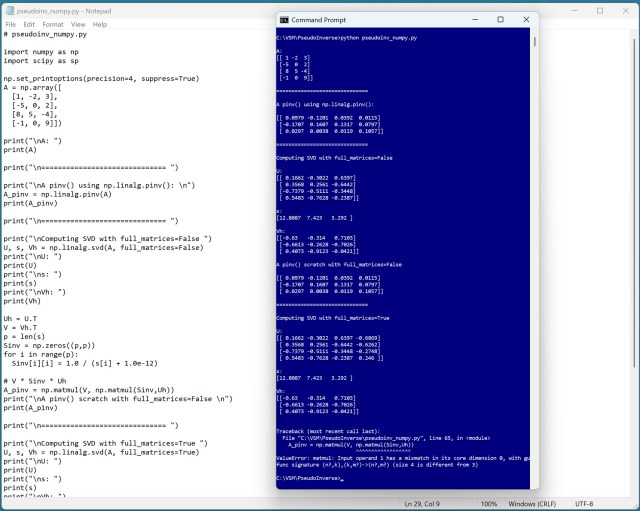

Singular value decomposition (SVD) factors a matrix into simpler components, which makes computing its pseudo-inverse efficient. Default settings can cause the calculation to fail, so some parameters may need manual adjustment.
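As a rough illustration of the SVD item above, here is a minimal NumPy sketch of a pseudo-inverse built from the decomposition. The function name `pinv_via_svd` and the `rcond` cutoff parameter are illustrative choices, and treating the singular-value cutoff as the "default setting" that needs adjusting is an assumption; the summary does not say which setting is meant.

```python
import numpy as np

def pinv_via_svd(a, rcond=1e-15):
    """Moore-Penrose pseudo-inverse from the SVD A = U diag(s) V^T.

    rcond is a hypothetical tuning knob: singular values at or below
    rcond * (largest singular value) are treated as exactly zero.
    """
    a = np.asarray(a, dtype=float)
    u, s, vt = np.linalg.svd(a, full_matrices=False)
    cutoff = rcond * s.max() if s.size else 0.0
    s_inv = np.zeros_like(s)
    keep = s > cutoff
    s_inv[keep] = 1.0 / s[keep]  # invert only the retained singular values
    # A^+ = V diag(1/s) U^T
    return (vt.T * s_inv) @ u.T

# A nearly rank-deficient matrix: the second row is almost twice the first.
a = np.array([[1.0, 2.0],
              [2.0, 4.0 + 1e-12]])

strict = pinv_via_svd(a)              # tiny singular value is inverted
loose = pinv_via_svd(a, rcond=1e-8)   # tiny singular value is discarded

print(np.abs(strict).max())  # very large entries
print(np.abs(loose).max())   # modest entries
```

With the tight cutoff, the near-zero singular value is inverted and the result is dominated by huge, noise-driven entries. Raising the cutoff by hand discards that direction and yields a stable rank-1 pseudo-inverse.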

George Osborne joins OpenAI to strengthen ChatGPT's ties with governments worldwide. He will lead the OpenAI for Countries division, which focuses on national AI deployments.

India's solar energy revolution aims to empower 10 million households with rooftop installations by 2027. Tata Power and Oneture collaborate on an AI-powered solar panel inspection solution using Amazon SageMaker AI, addressing challenges of manual inspections.

TechScape: Trump's AI order analyzed, OpenAI challenges Google, data centers eye space launch.

AI-generated music by companies like Udio, Suno, and Klay is gaining mainstream popularity, with AI acts like Velvet Sundown and Xania Monet making waves. Concerns arise as major labels embrace AI, potentially leading to a future where human-made art is overshadowed by endless AI-generated content.

Amazon SageMaker HyperPod now supports elastic training, allowing ML workloads to scale automatically based on resource availability. This dynamic adaptation maximizes GPU utilization, reduces costs, and accelerates model development without manual intervention, addressing the inefficiencies of static allocation in AI infrastructure.

Amazon S3 offers high performance for ML workloads. Consolidating data shards and caching can improve throughput and efficiency.
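To make the shard consolidation idea concrete, here is a small sketch that plans how many small objects could be grouped into fewer, larger shards. It is plain Python and makes no S3 calls. The function name `plan_shards`, the greedy grouping strategy, and the target size are all assumptions for illustration, not Amazon's recommended procedure.

```python
from typing import Iterable, List, Tuple

def plan_shards(objects: Iterable[Tuple[str, int]], target_bytes: int) -> List[List[str]]:
    """Greedily group (key, size) pairs into shards of roughly target_bytes.

    An object larger than target_bytes ends up in a shard of its own.
    """
    shards: List[List[str]] = []
    current: List[str] = []
    current_size = 0
    for key, size in objects:
        # Close the current shard if adding this object would overflow it.
        if current and current_size + size > target_bytes:
            shards.append(current)
            current, current_size = [], 0
        current.append(key)
        current_size += size
    if current:
        shards.append(current)
    return shards

# Hypothetical object listing: (key, size in bytes).
listing = [("a", 40), ("b", 40), ("c", 40), ("d", 100)]
print(plan_shards(listing, target_bytes=100))
```

Fewer, larger shards mean fewer requests per byte read, which is the usual motivation for consolidating before training.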

Google's AI Mode is mashing up recipes from multiple creators, leading to a significant drop in ad traffic. Bloggers are seeing their content distorted and reused without permission in AI-generated cookbooks and websites.

Unsloth's open-source framework allows efficient fine-tuning of AI models on NVIDIA GPUs, enhancing accuracy for specialized tasks. Developers can choose from three main fine-tuning methods based on their goals, from parameter-efficient to reinforcement learning, to improve AI models for specific use cases.

Microbes make up 99.999% of Earth's species. MIT's Yunha Hwang uses genomic language modeling to study their diverse genomes.

Pop stars including Elton John and Dua Lipa are leading a campaign for artists' rights in AI training, backed by 95% of respondents to a government consultation. Calls for stronger copyright protection and licensing requirements are gaining momentum in the fight against tech companies.

Dr. Roman Raczka emphasizes that AI can't replace therapist-led care for mental health. Teenagers are turning to AI chatbots because of rising NHS waiting lists and a lack of mental health support.

Andrew Yang revives UBI proposal to address tech-driven wealth disparities, echoing automation concerns. His "Freedom Dividend" aims to protect workers from job loss to robots.

Doctoral student Dauren Sarsenbayev from MIT NSE aims to extract heat from spent nuclear fuel, turning waste into energy. His innovative approach reframes nuclear waste as a valuable resource, offering a sustainable solution for energy production and waste management.

MIT researchers have developed an AI-driven robotic assembly system for rapidly prototyping furniture from premade parts. The system builds objects from user descriptions, reducing waste and enabling local fabrication.