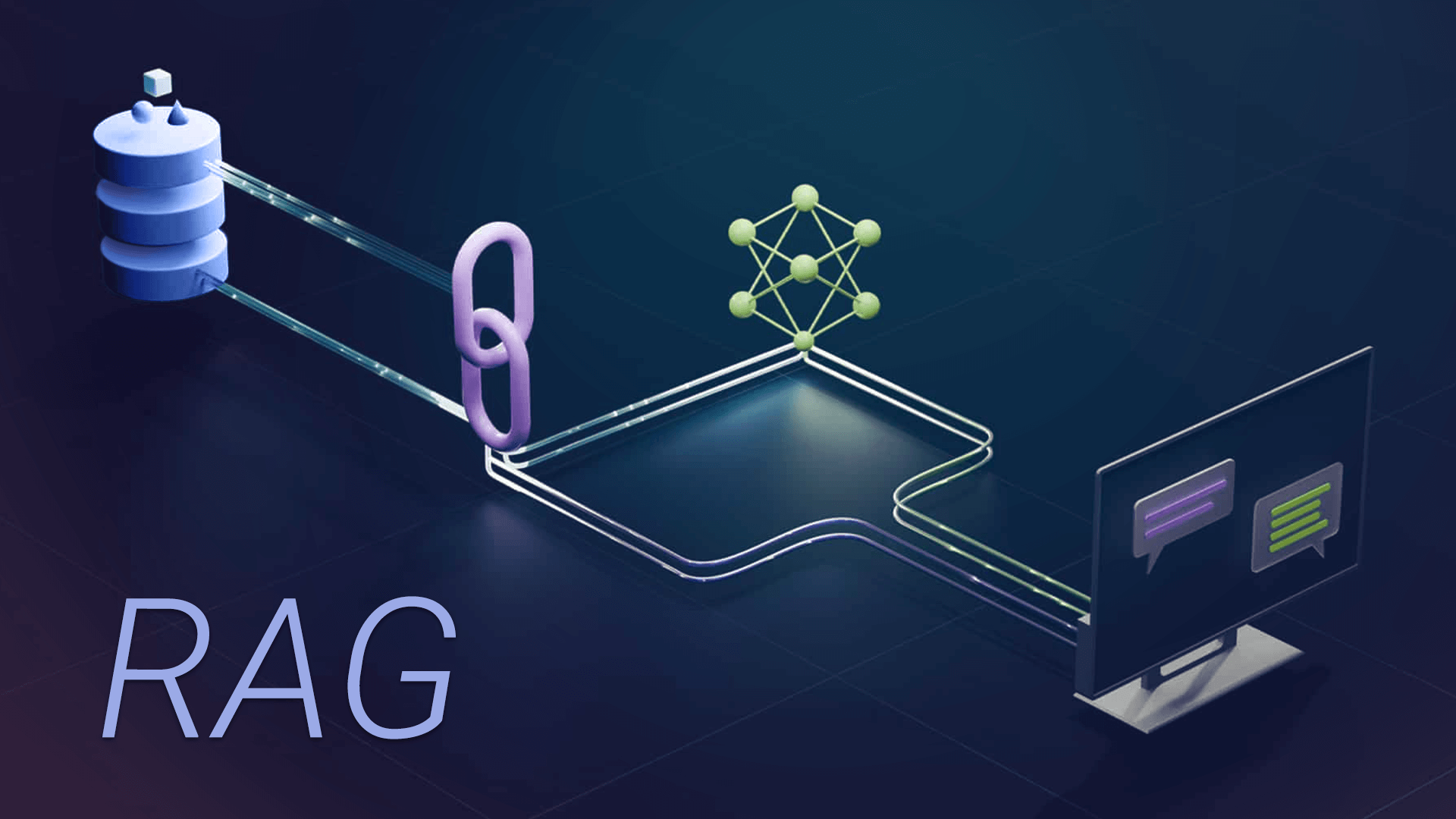

RAG explained: the AI behind Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) is an artificial intelligence technique that combines text generation by a large language model (LLM) with the retrieval of information from external sources. Because the model can draw on up-to-date data that was not part of its training, it can produce more accurate and relevant responses.

Key Stages of RAG:

- Retrieval: Searching for and retrieving relevant information from a knowledge base.

- Generation: Creating a response based on the retrieved information and the user query.

Summary: RAG retrieves external information before the LLM generates a response, which improves the accuracy and credibility of the generated text.
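The two stages can be illustrated with a minimal Python sketch. It assumes a tiny in-memory knowledge base and scores documents by simple word overlap instead of the embedding-based search a production system would use. The names `retrieve` and `generate` are hypothetical, and `generate` only builds the augmented prompt rather than calling a real LLM.

```python
import re

# Hypothetical in-memory knowledge base; a real system would query a document store.
KNOWLEDGE_BASE = [
    "The warranty on all laptops is two years from the date of purchase.",
    "Orders over 50 EUR ship free of charge within the EU.",
    "Support is available Monday to Friday, 9:00 to 17:00 CET.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return the set of its word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Retrieval stage: rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]


def generate(query: str, context: list[str]) -> str:
    """Generation stage (stub): build the augmented prompt an LLM would receive.

    A real implementation would send this prompt to a model; no LLM is called here.
    """
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


question = "How long is the laptop warranty?"
prompt = generate(question, retrieve(question, KNOWLEDGE_BASE))
print(prompt)
```

The key point is the order of operations: the relevant passage is found first and placed in the prompt, so the model answers from that text rather than from its training data alone.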

In practice, this process is often expanded to include:

- Query Formulation: Defining and structuring a query based on user input.

- Retrieval: Searching and retrieving relevant information from a knowledge base or other external sources.

- Preprocessing: Cleansing and structuring the retrieved data for further use.

- Generation: Creating a response based on the retrieved and preprocessed information, as well as the user query.

- Postprocessing: Final editing of the generated text to ensure its accuracy and relevance to the query.
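A sketch of how these five stages could be chained, assuming each stage is a plain Python function. All function names are hypothetical, the retrieval step uses naive word overlap, and `llm` is any callable that takes a prompt string and returns text, so a stub can stand in for a real model.

```python
import re
from typing import Callable


def formulate_query(user_input: str) -> str:
    """Query formulation: collapse whitespace and drop a trailing question mark."""
    return " ".join(user_input.split()).rstrip("?")


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval: keep the k documents sharing the most words with the query."""
    words = set(query.lower().split())
    return sorted(docs, key=lambda d: len(words & set(d.lower().split())), reverse=True)[:k]


def preprocess(passages: list[str]) -> list[str]:
    """Preprocessing: strip leftover HTML tags and whitespace, drop empties and duplicates."""
    cleaned = [re.sub(r"<[^>]+>", "", p).strip() for p in passages]
    return list(dict.fromkeys(c for c in cleaned if c))


def generate(query: str, passages: list[str], llm: Callable[[str], str]) -> str:
    """Generation: pass the query plus the cleaned context to an LLM callable."""
    context = "\n".join(passages)
    return llm(f"Context:\n{context}\n\nQuestion: {query}")


def postprocess(answer: str) -> str:
    """Postprocessing: only trims whitespace here; real systems may also check facts or citations."""
    return answer.strip()


def rag_pipeline(user_input: str, docs: list[str], llm: Callable[[str], str]) -> str:
    query = formulate_query(user_input)
    passages = preprocess(retrieve(query, docs))
    return postprocess(generate(query, passages, llm))


def fake_llm(prompt: str) -> str:
    """Hypothetical stub model: echoes the first context line, padded with spaces."""
    return "  " + prompt.splitlines()[1] + "  "


docs = ["<p>Refunds are issued within 14 days.</p>", "Shipping takes 3 days."]
print(rag_pipeline("How   are refunds issued?", docs, fake_llm))
```

Keeping each stage as a separate function makes it easy to swap one of them (for example, replacing word overlap with vector search) without touching the rest of the pipeline.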

Applications of RAG

RAG-enabled LLMs have a variety of applications, depending on the domain and the specific task. Some examples include:

1. Question Answering (QA)

RAG is used to build systems that answer user questions by extracting relevant information from large datasets. This data can come from:

- Knowledge bases (e.g., Wikidata, DBpedia)

- Corporate documents

- Scientific articles

- Web pages

- Other structured and unstructured sources

The system first finds relevant text segments and then uses them to generate an answer, which improves accuracy and lets users verify the information against its sources.

2. Chatbots and Virtual Assistants

RAG-based chatbots generate more accurate and contextually appropriate responses by consulting external sources for up-to-date information about products, services, and other topics, so users receive more reliable answers. This is especially useful for:

- Customer support (answers about products and services)

- Technical support

For example, a customer support chatbot could use RAG to search for answers to questions about warranties or product specifications by accessing the company's knowledge base.

3. Document Handling

RAG is effective in tasks such as:

- Automatic summarization: Creating brief summaries of long texts, such as scientific articles or reports.

- Report generation: Compiling documents in which information from multiple sources must be synthesized into a coherent, understandable text.

- Abstract creation: Synthesizing information from scientific publications, where the system extracts key information from documents to generate a concise, informative text.

- Document summaries: Extracting and structuring key information.

4. Personalized Recommendations

RAG can be used to build systems that provide personalized recommendations in various fields:

- E-commerce (product selection based on preferences)

- Content platforms (article, video recommendations)

- Educational systems (personalized material selection)

For example, an e-commerce system could use RAG to find information about products that might interest a user based on their purchase history and preferences, by retrieving data from the product catalog and customer reviews.

5. Analytics and Data Processing

RAG can be applied for:

- Analyzing unstructured data

- User reviews

- Sentiment analysis of social media posts and app reviews: for example, analyzing reviews of mobile games on platforms such as Google Play and the Apple App Store.

- Text, image and video processing

- Extracting insights from large data volumes: Using data analysis methods to uncover useful information and patterns hidden in massive datasets. This includes:

  - Trend detection: Identifying trends and changes over time.

  - Behavior analysis: Understanding user or customer behavior.

  - Forecasting: Using historical data to predict future events.

  - Process optimization: Improving business processes based on obtained data.

For instance, companies may analyze sales data to identify the most popular products and optimize inventory, and social media platforms can analyze user activity to improve content recommendations.

6. Content Creation

RAG is also used for:

- Generating news articles, where the system extracts current information from various sources and uses it to create informative text.

- Creating technical documentation

- Writing reviews and analytical materials

- Generating fact-based literary content, like stories or poems, supplemented with information retrieved from relevant sources.

Benefits of Using RAG

- Working with Current Data: RAG keeps answers relevant, even in rapidly changing fields, because the knowledge base can be updated without retraining the model.

- Increased Accuracy: Grounding responses in up-to-date external data reduces the risk of errors.

- Reduced Model Hallucination: Using verified data reduces the likelihood of fabricated information.

- Source Traceability: Allows identifying the sources of information.

- Transparency: The ability to trace and indicate information sources makes results more explainable.

RAG Architecture and Components

1. Knowledge Base

- Vector Databases: Specialized databases for storing and searching vector representations of text.

- Traditional Databases: Relational or document-oriented databases.

- Hybrid Solutions: Combining various types of storage for optimal performance.
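To make the idea of a vector database concrete, here is a toy in-memory stand-in in Python. It assumes the vectors have already been produced by some embedding model (the short vectors below are made up for illustration) and ranks stored items by cosine similarity. `InMemoryVectorStore` is a hypothetical class, not a real library; real vector databases add approximate nearest-neighbour indexes, persistence, and metadata filtering on top of this.

```python
import math


class InMemoryVectorStore:
    """Toy stand-in for a vector database (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self.items: list[tuple[str, list[float]]] = []

    def add(self, item_id: str, vector: list[float]) -> None:
        """Store a vector under an id, e.g. the id of a text chunk."""
        self.items.append((item_id, vector))

    @staticmethod
    def cosine(a: list[float], b: list[float]) -> float:
        """Cosine similarity: dot product divided by the product of the vector norms."""
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float]]:
        """Exact (brute-force) top-k search over every stored vector."""
        scored = [(item_id, self.cosine(query, vec)) for item_id, vec in self.items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


store = InMemoryVectorStore()
store.add("doc-a", [1.0, 0.0])  # made-up 2-dimensional "embeddings"
store.add("doc-b", [0.7, 0.7])
store.add("doc-c", [0.0, 1.0])
print(store.search([1.0, 0.1], k=2))
```

Brute-force search is linear in the number of stored vectors, which is why dedicated vector databases rely on approximate indexes for large collections.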

2. Indexing and Preprocessing

- Chunking: Splitting text into meaningful segments of optimal size.

- Embeddings: Creating vector representations of text for efficient search.

- Filtering: Removing irrelevant or low-quality content.

- Enrichment: Adding metadata and structuring information.
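A minimal sketch of the chunking, filtering, and enrichment steps, under stated assumptions: text is split into whitespace-separated words (real pipelines usually count model tokens or split on sentences and headings), consecutive chunks overlap so that context at a boundary is not lost entirely, fragments shorter than `min_words` are filtered out, and each chunk gets simple metadata. The function names, defaults, and metadata fields are hypothetical; computing the embeddings would be a separate call to an embedding model.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of at most chunk_size words.

    Consecutive chunks share `overlap` words, so text near a boundary
    appears in both neighbouring chunks.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


def build_chunks(doc_id: str, text: str, source: str, chunk_size: int = 50,
                 overlap: int = 10, min_words: int = 5) -> list[dict]:
    """Chunk a document, drop tiny fragments (filtering), attach metadata (enrichment)."""
    records = []
    for position, chunk in enumerate(chunk_words(text, chunk_size, overlap)):
        if len(chunk.split()) < min_words:
            continue  # too short to be a useful retrieval unit
        records.append({
            "id": f"{doc_id}-{position}",  # hypothetical id scheme
            "text": chunk,
            "source": source,
            "position": position,
        })
    return records


sample = " ".join(f"w{i}" for i in range(12))
print(chunk_words(sample, chunk_size=5, overlap=2))
```

Larger overlaps preserve more context across boundaries at the cost of storing and embedding more duplicated text, which is part of choosing an optimal chunk size.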

3. Search Mechanisms

- Semantic Search: Searching based on meaning, not keywords.

- Hybrid Search: Combining various search methods:

  - Semantic search

  - Full-text search

  - Metadata search

- Result Ranking: Identifying the most relevant segments.
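Hybrid search needs some way to merge the ranked lists produced by the different search methods. The article does not prescribe one; the sketch below uses reciprocal rank fusion (RRF), a common rank-merging technique in which each document earns 1 / (k + rank) from every list it appears in. The document ids and the three input rankings are made up for illustration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists of document ids with reciprocal rank fusion."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # k dampens the gap between top ranks; 60 is a commonly used default.
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


semantic = ["d1", "d2", "d3"]   # hypothetical semantic-search ranking
full_text = ["d3", "d1", "d4"]  # hypothetical full-text ranking
metadata = ["d1", "d4"]         # hypothetical metadata-filter ranking
print(reciprocal_rank_fusion([semantic, full_text, metadata]))  # ['d1', 'd3', 'd4', 'd2']
```

RRF uses only ranks, so it does not require the scores of the different search methods to be on comparable scales.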

4. Prompt Engineering in RAG

- Context Formatting: Structuring the retrieved information for the LLM.

- System Prompts: Special instructions for the model on working with retrieved information.

- Context Window Management: Keeping the prompt within the model's context window by optimizing how many tokens the retrieved context uses.
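These three points can be combined in one small prompt-building function. This is a sketch under assumptions: the system instruction wording is illustrative, passages arrive already sorted by relevance as (source, text) pairs, and token counts are approximated by counting words, whereas a real system would count with the target model's tokenizer. Numbering each source also supports the source traceability listed among the benefits.

```python
def build_prompt(question: str, passages: list[tuple[str, str]],
                 max_context_tokens: int = 300) -> str:
    """Assemble a RAG prompt from (source, text) pairs, most relevant first.

    Token cost is approximated by whitespace-separated words (an assumption).
    """
    system = ("Answer using only the numbered sources below. "
              "Cite sources as [n]. If the answer is not in the sources, say so.")
    used = 0
    blocks = []
    for n, (source, text) in enumerate(passages, start=1):
        cost = len(text.split())
        if used + cost > max_context_tokens:
            break  # stop before overflowing the context budget
        blocks.append(f"[{n}] ({source}) {text}")
        used += cost
    context = "\n".join(blocks)
    return f"{system}\n\nSources:\n{context}\n\nQuestion: {question}"


passages = [
    ("faq.md", "Returns are accepted within 30 days of delivery."),
    ("policy.pdf", " ".join(["filler"] * 400)),  # too long for the budget below
]
print(build_prompt("What is the return window?", passages, max_context_tokens=50))
```

Stopping at the first passage that would overflow the budget keeps the most relevant passages intact; an alternative is to skip that passage and try shorter ones further down the list.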

Challenges

1. Technical Complexities

- Scalability: Managing large volumes of data.

- Latency: Reducing delays between the query and the response.

- Data Updates: Keeping the knowledge base regularly updated and in sync with its source databases.

- Cost: Expenses for computation and data storage.
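One way to approach the data-update challenge is to store a content hash for every indexed document and compare it with the current source data, so that only new or changed documents are re-embedded. The sketch below assumes documents are identified by stable ids; the function names and data layout are hypothetical, and SHA-256 from Python's standard `hashlib` module serves as the fingerprint.

```python
import hashlib


def content_hash(text: str) -> str:
    """SHA-256 fingerprint of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_sync(indexed: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Decide which documents to add, re-embed, or delete.

    indexed: doc_id -> hash stored alongside the index (hypothetical layout)
    current: doc_id -> current text from the source system
    """
    current_hashes = {doc_id: content_hash(text) for doc_id, text in current.items()}
    return {
        "add": sorted(d for d in current_hashes if d not in indexed),
        "update": sorted(d for d in current_hashes
                         if d in indexed and indexed[d] != current_hashes[d]),
        "delete": sorted(d for d in indexed if d not in current_hashes),
    }


indexed = {"faq": content_hash("old answer"), "terms": content_hash("unchanged")}
current = {"faq": "new answer", "terms": "unchanged", "pricing": "new page"}
print(plan_sync(indexed, current))
```

Re-embedding only changed documents also addresses the cost challenge, since embedding calls are usually the most expensive part of an index refresh.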

2. Quality of Results

- Search Relevance: Ensuring the search returns the information that is actually needed.

- Consistency of Responses: Handling contradictory data from different sources.

- Information Completeness: Avoiding missing important details.

- Credibility: Ensuring source quality.

3. Ethical Aspects

- Transparency: Explainability of system decisions.

- Confidentiality: Protecting personal and corporate data.

- Copyright: Handling copyright-protected content appropriately.

- Bias: Potential distortions in the data and, as a result, in the responses.

Best Practices for Implementation

1. Data Preparation

- Careful preprocessing and cleaning.

- Optimal chunking strategy.

- Choosing an appropriate model for embeddings.

- Regular knowledge base updates.

2. Search Optimization

- Setting relevance parameters.

- Using caching.

- Applying filters and faceted search.

- Balancing accuracy and speed.

3. Monitoring and Improvement

- Tracking quality metrics.

- Collecting user feedback.

- A/B testing various approaches.

- Continual prompt optimization.
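Tracking retrieval quality usually starts with standard ranking metrics. The sketch below computes recall@k and mean reciprocal rank (MRR), assuming a small hand-labelled set of queries for which the relevant document ids are known; the ids are made up for illustration. These are two common choices among many, not metrics the article prescribes.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of the relevant documents that appear among the top-k retrieved ids."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def mean_reciprocal_rank(runs: list[tuple[list[str], set[str]]]) -> float:
    """Average over queries of 1/rank of the first relevant result (0 if none is found)."""
    total = 0.0
    for retrieved, relevant in runs:
        for rank, doc_id in enumerate(retrieved, start=1):
            if doc_id in relevant:
                total += 1.0 / rank
                break
    return total / len(runs) if runs else 0.0


runs = [
    (["d3", "d1"], {"d1"}),  # first relevant hit at rank 2
    (["d2", "d5"], {"d2"}),  # first relevant hit at rank 1
    (["d4"], {"d9"}),        # no relevant hit
]
print(recall_at_k(["d3", "d1", "d7"], {"d1", "d2"}, k=2))  # 0.5
print(mean_reciprocal_rank(runs))  # 0.5
```

Recomputing such metrics on a fixed query set after each change to chunking, embeddings, or prompts gives a simple baseline for the A/B testing mentioned above.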

Future of RAG

- Multimodality: Working with various data types (text, images, audio).

- Federated Learning: Distributed RAG systems that work across decentralized data sources.

- Automatic Optimization: Self-tuning systems.

- Integration with Other Technologies: Combining with fine-tuning, reinforcement learning from human feedback (RLHF), and other approaches.

Oleksandr Slavinskyi