New Study Combines Recurrent Neural Networks (RNN) with the Concept of Annealing to Solve Real-world Optimization Problems

Optimization problems involve finding the best feasible answer among many options, and they arise frequently both in everyday life and in most areas of scientific research. However, many complex problems cannot be solved with simple computational methods, or would take an inordinate amount of time to solve.

Because simple algorithms are ineffective at solving these problems, experts around the world have worked to develop more effective strategies that can solve them within realistic time frames. Artificial neural networks (ANN) are at the heart of some of the most promising techniques explored to date.

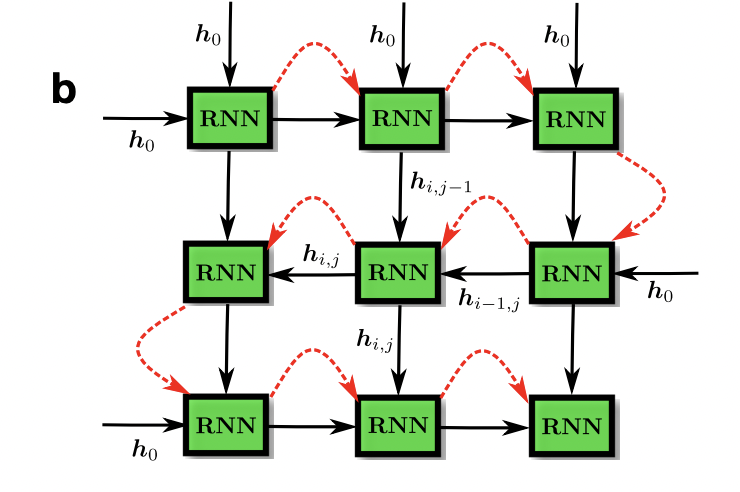

A new study by the Vector Institute, the University of Waterloo and the Perimeter Institute for Theoretical Physics in Canada presents variational neural annealing. This new optimization method combines recurrent neural networks (RNNs) with the notion of annealing. Using a parameterized model, the technique generalizes the distribution of feasible solutions to a particular problem. Its goal is to solve real-world optimization problems using a novel algorithm based on annealing theory and on RNNs borrowed from natural language processing (NLP).

The proposed framework is based on the principle of annealing, inspired by metallurgical annealing, in which a material is heated and then cooled slowly to bring it to a lower-energy, more resilient and more stable state. Simulated annealing was developed by analogy with this process, and it seeks numerical solutions to optimization problems.
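To make the idea concrete, here is a minimal sketch of classical simulated annealing on a small spin chain. It is not the code from the study: the function names, the chain problem, the geometric cooling schedule and all parameter values are assumptions chosen for illustration.

```python
import math
import random

def energy(spins, couplings):
    """Energy of a 1D spin chain: E = -sum_i J_i * s_i * s_{i+1}."""
    return -sum(j * spins[i] * spins[i + 1] for i, j in enumerate(couplings))

def simulated_annealing(couplings, t_start=2.0, t_end=0.01, steps=5000, seed=0):
    """Return the lowest energy found and the matching spin configuration."""
    rng = random.Random(seed)
    n = len(couplings) + 1
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    current = energy(spins, couplings)
    best, best_spins = current, spins[:]
    for step in range(steps):
        # Geometric cooling schedule from t_start down to t_end (an assumption).
        t = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(n)
        spins[i] = -spins[i]  # propose flipping a single spin
        proposed = energy(spins, couplings)
        delta = proposed - current
        # Metropolis rule: always accept downhill moves, sometimes accept uphill ones.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            current = proposed
            if current < best:
                best, best_spins = current, spins[:]
        else:
            spins[i] = -spins[i]  # rejected: undo the flip
    return best, best_spins

couplings = [1.0, -1.0, 1.0, 1.0, -1.0]  # hypothetical example problem
best, best_spins = simulated_annealing(couplings)
print(best, best_spins)
```

At high temperature the search accepts many uphill moves and explores widely; as the temperature falls it settles into low-energy configurations, mirroring the slow cooling of a metal.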

The main distinguishing feature of this optimization method is that it combines the efficiency and processing capacity of ANNs with the advantages of simulated annealing techniques. The team used RNNs, a class of algorithms that has shown particular promise in NLP applications. While these networks are typically used in NLP to interpret human language, the researchers repurposed them to solve optimization problems.

Compared to more traditional numerical annealing implementations, their RNN-based method produced better solutions, increasing the efficiency of both classical and quantum annealing procedures. Using autoregressive networks, the researchers were able to encode the annealing paradigm. Their strategy takes optimization problem solving to a new level by directly exploiting the infrastructure used to train modern neural networks, such as TensorFlow or PyTorch, accelerated by GPUs and TPUs.
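The sketch below illustrates, under heavy simplification, what it means to pair an autoregressive model with annealing. An autoregressive model draws a solution one variable at a time, so the exact probability of each sample is known, and a variational free energy F = ⟨E⟩ − T·S can be estimated from samples alone. The one-parameter toy model here stands in for an RNN, and every name and value is hypothetical; it is not the authors' implementation and it does no training.

```python
import math
import random

def sample_chain(n, w, rng):
    """Draw spins one at a time; return the configuration and its log-probability.

    Toy autoregressive model (an assumption, standing in for an RNN):
    the first spin is uniform, then P(s_i = s_{i-1}) = sigmoid(w).
    """
    p_same = 1.0 / (1.0 + math.exp(-w))
    spins = [rng.choice((-1, 1))]
    log_p = math.log(0.5)
    for _ in range(n - 1):
        if rng.random() < p_same:
            spins.append(spins[-1])
            log_p += math.log(p_same)
        else:
            spins.append(-spins[-1])
            log_p += math.log(1.0 - p_same)
    return spins, log_p

def chain_energy(spins):
    """Ferromagnetic chain: aligned neighbours lower the energy."""
    return -sum(a * b for a, b in zip(spins, spins[1:]))

def free_energy(n, w, temperature, samples=2000, seed=0):
    """Monte Carlo estimate of F = <E> - T*S, using S = -<log p>."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        spins, log_p = sample_chain(n, w, rng)
        total += chain_energy(spins) + temperature * log_p
    return total / samples

# Compare a uniform model (w = 0) with a sharply peaked one (w = 3).
for temperature in (5.0, 0.1):
    print(temperature, free_energy(6, 0.0, temperature), free_energy(6, 3.0, temperature))
```

At high temperature the entropy term dominates and the uniform model has the lower free energy; at low temperature the peaked, low-energy model wins. Lowering the temperature gradually while adjusting the model parameters to keep the free energy low is the annealing idea in variational form; in a real implementation the parameters would be trained by gradient descent in a framework such as TensorFlow or PyTorch.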

The team ran several tests comparing the method with traditional annealing-based optimization methods in numerical simulations. On many paradigmatic optimization problems, the proposed approach outperformed the traditional annealing methods it was tested against.

In the future, this algorithm could be applied to a wide variety of real-world optimization problems, allowing experts in various fields to solve them faster.

The researchers would like to further evaluate the performance of their algorithm on more realistic problems, as well as to compare it to the performance of existing advanced optimization techniques. They also intend to improve their technique by replacing certain components or incorporating new ones.

You can view the full article here

The code is also available on GitHub:

Variational Neural Annealing

Simulated Classical and Quantum Annealing