Deep active learning – a new approach to model training

A recent review article published in Intelligent Computing sheds light on the burgeoning field of deep active learning (DeepAL), which integrates active learning principles with deep learning techniques to optimize sample selection in neural network training for AI tasks.

Deep learning, known for its ability to learn intricate patterns from data, has long been hailed as a game-changer in AI. However, its effectiveness hinges on large amounts of labeled data, and producing those labels is a resource-intensive process. You can learn more about deep learning in our article Machine learning vs Deep learning: know the differences.

Active learning, on the other hand, offers a solution by strategically selecting the most informative samples for annotation, thereby reducing the annotation burden.

By combining the strengths of deep learning with the efficiency of active learning within the framework of foundation models, researchers are unlocking new possibilities in AI research and applications. Foundation models, such as OpenAI's GPT-3 and Google's BERT, are pre-trained on vast datasets and can be adapted to natural language processing and other tasks with minimal fine-tuning.

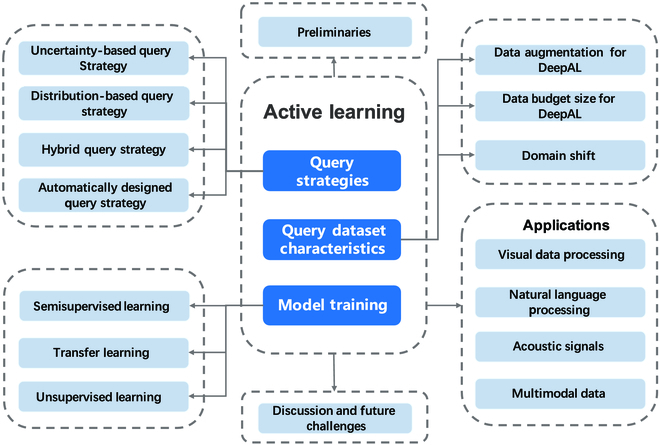

Fig. 1. Schematic structure of DeepAL

Deep active learning strategies are categorized into four types: uncertainty-based, distribution-based, hybrid, and automatically designed. While uncertainty-based strategies focus on samples with high uncertainty, distribution-based strategies prioritize representative samples. Hybrid approaches combine both metrics, while automatically designed strategies leverage meta-learning or reinforcement learning for adaptive selection.
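To make the first three categories more concrete, here is a minimal sketch in Python with NumPy. It is an illustration under stated assumptions, not the method from the review: the function names are hypothetical, predictive entropy stands in for "uncertainty", greedy k-center selection stands in for "representativeness", and the hybrid variant is just one simple way of combining the two. The inputs `probs` (class probabilities predicted by the model) and `features` (sample embeddings) are assumed to come from whatever network is being trained.

```python
import numpy as np

def entropy_scores(probs):
    """Predictive entropy per sample; probs has shape (n_samples, n_classes)."""
    p = np.clip(probs, 1e-12, 1.0)  # avoid log(0)
    return -(p * np.log(p)).sum(axis=1)

def select_uncertain(probs, k):
    """Uncertainty-based: indices of the k highest-entropy samples."""
    return np.argsort(-entropy_scores(probs), kind="stable")[:k]

def select_k_center(features, k, first=0):
    """Distribution-based: greedy k-center (farthest-point) selection.

    Starts from index `first` and repeatedly adds the sample that is
    farthest from everything chosen so far, so the picks spread out
    over the feature space.
    """
    chosen = [first]
    dist = np.linalg.norm(features - features[first], axis=1)
    while len(chosen) < k:
        nxt = int(np.argmax(dist))
        chosen.append(nxt)
        # Distance of every sample to its nearest chosen center.
        dist = np.minimum(dist, np.linalg.norm(features - features[nxt], axis=1))
    return np.array(chosen)

def select_hybrid(probs, features, k, pool_factor=3):
    """Hybrid (illustrative): keep the most uncertain candidates,
    then pick a diverse subset of them with k-center selection."""
    n_candidates = min(len(probs), k * pool_factor)
    candidates = select_uncertain(probs, n_candidates)
    return candidates[select_k_center(features[candidates], k)]
```

In practice `probs` would be softmax outputs on the unlabeled pool and `features` a hidden-layer embedding. Automatically designed strategies, which learn the selection rule itself, are beyond the scope of a sketch this small.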

In terms of model training, the authors discuss integrating deep active learning with existing methods such as semi-supervised, transfer, and unsupervised learning to optimize performance. They underscore the need to extend deep active learning beyond task-specific models to encompass comprehensive foundation models for more effective AI training.

One of the primary advantages of integrating deep learning with active learning is the significant reduction in annotation effort. Leveraging the wealth of knowledge encoded within foundation models, active learning algorithms can intelligently select samples that offer valuable insights, streamlining the annotation process and accelerating model training.

Moreover, this combination of methodologies can lead to improved model performance. Active learning steers the labeling budget toward samples that are informative, diverse, and representative, which supports better generalization and higher accuracy. Because foundation models supply rich representations learned during pre-training, active learning algorithms can build on them to yield more robust AI systems.

Cost-effectiveness is another compelling benefit. By reducing the need for extensive manual annotation, active learning significantly lowers the overall cost of model development and deployment. This democratizes access to advanced AI technologies, making them more accessible to a wider range of organizations and individuals.

Furthermore, the feedback loop at the heart of active learning fosters iterative improvement and continuous learning. In each round the model selects samples, annotators label them, and the model is retrained, so it refines its understanding of the data distribution and adapts its predictions accordingly. This dynamic feedback mechanism makes AI systems more responsive, allowing them to keep pace with changing data.
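The loop of selecting, labeling, and retraining can be sketched in a few lines. This is a toy illustration under stated assumptions rather than a real DeepAL pipeline: `CentroidModel` is a hypothetical stand-in for a neural network, `oracle` is a hypothetical callable that plays the role of the human annotator, and least-confidence sampling is used as the query rule purely for simplicity.

```python
import numpy as np

class CentroidModel:
    """Toy stand-in for a deep model: softmax over negative
    distances to per-class centroids."""

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict_proba(self, X):
        d = np.linalg.norm(X[:, None, :] - self.centroids_[None, :, :], axis=2)
        logits = -d
        logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
        e = np.exp(logits)
        return e / e.sum(axis=1, keepdims=True)

def active_learning_loop(X, oracle, initial_idx, rounds, batch_size):
    """Pool-based active learning with least-confidence sampling.

    oracle(i) returns the label of sample i (the "annotator").
    Returns the final model and the indices labeled, in query order.
    """
    labeled = [int(i) for i in initial_idx]
    labels = {i: oracle(i) for i in labeled}
    model = CentroidModel()
    for _ in range(rounds):
        model.fit(X[labeled], np.array([labels[i] for i in labeled]))
        unlabeled = np.array([i for i in range(len(X)) if i not in labels])
        if len(unlabeled) == 0:
            break
        probs = model.predict_proba(X[unlabeled])
        # Least confidence: query samples whose top class probability is lowest.
        order = np.argsort(probs.max(axis=1), kind="stable")
        for i in unlabeled[order[:batch_size]]:
            labels[int(i)] = oracle(int(i))
            labeled.append(int(i))
    # Retrain once more so the returned model has seen every label.
    model.fit(X[labeled], np.array([labels[i] for i in labeled]))
    return model, labeled
```

With a real network, `fit` would be a fine-tuning step and `oracle` a labeling interface; the control flow stays the same.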

However, challenges remain in harnessing the full potential of deep learning and active learning with foundation models. Accurately estimating model uncertainty, selecting appropriate experts for annotation, and designing effective active learning strategies are key areas that require further exploration and innovation.

In conclusion, the convergence of deep learning and active learning in the era of foundation models represents a significant milestone in AI research and applications. By pairing the capabilities of foundation models with the sample efficiency of active learning, researchers and practitioners can train models with less labeled data, improve performance, and drive innovation across diverse domains.