How sound can model the world

Researchers at the Massachusetts Institute of Technology (MIT) and the MIT-IBM Watson AI Lab are exploring how spatial acoustic information can help machines better represent their environment. They developed a machine-learning model that captures how any sound in a room propagates through space, which lets it simulate what a listener would hear at different locations.

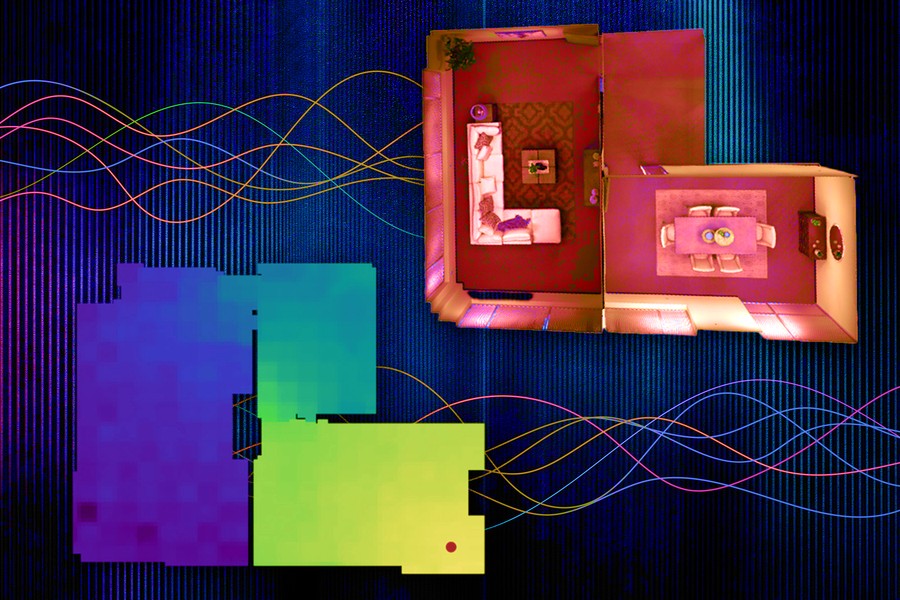
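To make the idea of "what a listener will hear in different locations" concrete, here is a minimal Python sketch. It is not the researchers' model: it assumes an idealized open space with no walls or reflections, where sound simply arrives later and quieter the farther the listener is from the source. The function names (`impulse_response`, `hear`) and all parameters are hypothetical, chosen only for illustration. A learned model such as the one described here would have to account for the reflections and obstacles of a real room, which this toy ignores.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at roughly 20 °C


def impulse_response(source, listener, sample_rate=8000, length=512):
    """Toy free-field response: a single delayed, attenuated spike.

    Assumption: no walls, so there are no echoes or reverberation.
    """
    distance = math.dist(source, listener)
    delay = round(distance / SPEED_OF_SOUND * sample_rate)  # delay in samples
    response = [0.0] * length
    if delay < length:
        # Inverse-distance attenuation, clamped so the gain never exceeds 1.
        response[delay] = 1.0 / max(distance, 1.0)
    return response


def convolve(signal, response):
    """Plain discrete convolution of two sample lists."""
    out = [0.0] * (len(signal) + len(response) - 1)
    for i, s in enumerate(signal):
        if s == 0.0:
            continue
        for j, r in enumerate(response):
            out[i + j] += s * r
    return out


def hear(signal, source, listener, sample_rate=8000):
    """Simulate what a listener at `listener` hears from `source`."""
    return convolve(signal, impulse_response(source, listener, sample_rate))


click = [1.0]  # a single-sample click emitted at the source
near = hear(click, (0.0, 0.0, 0.0), (3.43, 0.0, 0.0))
far = hear(click, (0.0, 0.0, 0.0), (6.86, 0.0, 0.0))
# The far listener hears the click later and more quietly than the near one.
```

Convolving a source signal with a position-dependent response is the standard way to express how a space shapes a sound. In this sketch the response is a one-line formula, whereas a learned model would have to infer it from recordings.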

Because it simulates a room's acoustics accurately, the system can learn the room's underlying 3D geometry from sound recordings. The researchers can use the acoustic information their system captures to build an accurate visual representation of the room, much as humans use sound to judge the properties of their physical surroundings.

Beyond its potential applications in virtual and augmented reality, the technique could help AI agents better understand the world around them. For example, Yilun Du, a co-author of a paper describing the model and a graduate student in the Department of Electrical Engineering and Computer Science (EECS), says: "By modeling the acoustic properties of the sound in its environment, an underwater exploration robot could sense things that are farther away than it could with vision alone."

“Most researchers have only focused on modeling vision so far. But as humans, we have multimodal perception. Not only is vision important, sound is also important. I think this work opens up an exciting research direction on better utilizing sound to model the world,” Du says.

Learn more about how sound can be used to model the world at https://news.mit.edu/2022/sound-model-ai-1101