Mamba-3 – the next evolution in language modeling

A new chapter in AI sequence modeling has arrived with Mamba-3, a neural architecture that aims to improve the quality, efficiency, and capabilities of large language models (LLMs).

Mamba-3 builds on a lineage of innovations that began with the original Mamba architecture in 2023. Unlike Transformers, which have dominated language modeling since their introduction in 2017, Mamba models are built on state space models (SSMs), a class of models originally developed in control theory and signal processing to describe how a hidden state evolves over time in response to continuous input signals.
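For reference, the continuous-time SSM this line of work starts from is shown below, in the standard notation of the S4/Mamba literature (input $x(t)$, hidden state $h(t)$, output $y(t)$, matrices $A$, $B$, $C$), together with its discretization at step size $\Delta$ under a zero-order hold:

$$
\begin{aligned}
h'(t) &= A\,h(t) + B\,x(t), \qquad y(t) = C\,h(t),\\
h_t &= \bar{A}\,h_{t-1} + \bar{B}\,x_t, \qquad y_t = C\,h_t,\\
\bar{A} &= \exp(\Delta A), \qquad \bar{B} = (\Delta A)^{-1}\big(\exp(\Delta A) - I\big)\,\Delta B.
\end{aligned}
$$

The original Mamba keeps $\bar{A} = \exp(\Delta A)$ but simplifies the input term to $\bar{B} \approx \Delta B$.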

Transformers, while powerful, scale quadratically in compute with sequence length because every token attends to every earlier token, and during inference their key-value (KV) cache grows linearly with the context. These costs create bottlenecks in both training and inference. Mamba models, by contrast, scale linearly with sequence length during training and carry a fixed-size recurrent state during generation, so the memory used per decoding step stays constant no matter how long the context grows. Mamba has been shown to match or exceed similarly sized Transformers on standard LLM benchmarks while substantially reducing inference latency and memory requirements.
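The memory contrast can be sketched with a toy decoding loop. This is a minimal illustration with hypothetical sizes (`d_head`, `d_state`) for a single head or channel, not a model of any real implementation: the attention side appends one key and one value vector per generated token, while the SSM side overwrites a fixed-size state using a simple diagonal linear recurrence.

```python
# Toy sketch with hypothetical sizes; a real model has many layers and heads.

def attention_cache_sizes(num_tokens: int, d_head: int) -> list[int]:
    """Floats held in a single-head KV cache after each decoding step."""
    kv_cache = []  # one (key, value) pair per generated token
    sizes = []
    for _ in range(num_tokens):
        key, value = [0.0] * d_head, [0.0] * d_head  # placeholder projections
        kv_cache.append((key, value))
        sizes.append(2 * d_head * len(kv_cache))     # cache grows every step
    return sizes


def ssm_state_sizes(num_tokens: int, d_state: int, decay: float = 0.9) -> list[int]:
    """Floats held by a diagonal linear recurrence h_t = decay * h_{t-1} + x_t."""
    h = [0.0] * d_state
    sizes = []
    for t in range(num_tokens):
        x_t = float(t % 3)                            # placeholder input token
        h = [decay * h_i + x_t for h_i in h]          # state is overwritten, not appended
        sizes.append(len(h))
    return sizes


kv = attention_cache_sizes(num_tokens=1000, d_head=64)
ssm = ssm_state_sizes(num_tokens=1000, d_state=16)
print(kv[0], kv[-1])    # 128 128000
print(ssm[0], ssm[-1])  # 16 16
```

The cache grows linearly with the number of generated tokens while the recurrent state does not; the trade-off is that the fixed state must compress everything it has seen.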

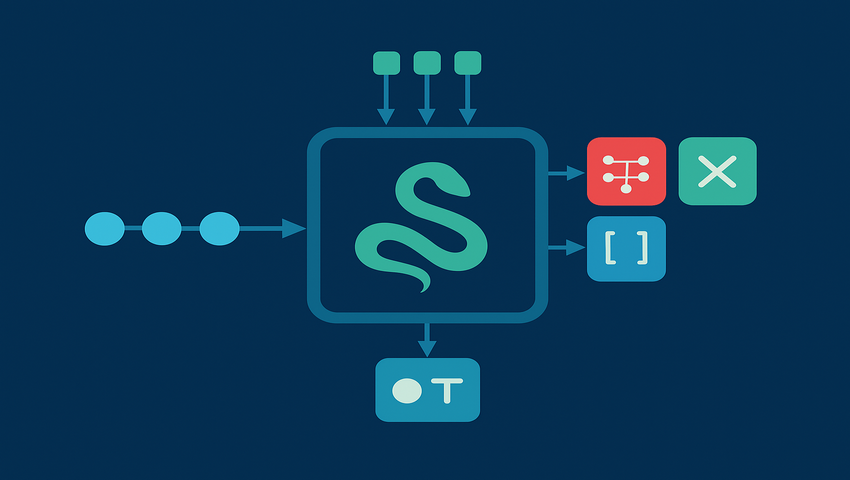

Mamba’s key component is its selective state space model (S6), which gives the model a content-based selection ability loosely analogous to attention. Because the SSM parameters (the step size Δ and the projections B and C) are computed from the current input, the model decides token by token how much of its state to keep and how much of the new input to write, so it can focus on relevant context while “forgetting” less useful information. A hardware-aware parallel scan then computes these input-dependent recurrences efficiently on GPUs, maximizing throughput without compromising quality.
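Below is a minimal sketch of the two ideas in this paragraph. It assumes a single input channel, a diagonal state matrix `A` with negative entries, and hypothetical scalar weights (`w_delta`, `b_delta`, `w_B`, `w_C`) standing in for the learned projections. `selective_scan` computes the step size Δ and the projections B and C from each input token, discretizes with `exp(Δ·A)` for the state and the simplified `Δ·B` for the input, and runs the recurrence sequentially. `parallel_prefix` shows why the recurrence can be parallelized: each step is an affine map h ↦ a·h + b, and composing such maps is associative, so a prefix scan in log2(T) rounds reproduces the sequential result. Mamba's real kernel is a fused, hardware-aware GPU implementation; this pure-Python version only illustrates the math.

```python
import math


def softplus(z: float) -> float:
    # Numerically stable log(1 + e^z); keeps the step size positive.
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(xs, A, w_delta, b_delta, w_B, w_C):
    """Sequential selective SSM for one channel (hypothetical scalar weights)."""
    N = len(A)
    h = [0.0] * N
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + b_delta)   # input-dependent step size
        B = [wb * x for wb in w_B]                 # input-dependent input projection
        C = [wc * x for wc in w_C]                 # input-dependent output projection
        for n in range(N):
            a_bar = math.exp(delta * A[n])         # decay in (0, 1) since A[n] < 0
            h[n] = a_bar * h[n] + delta * B[n] * x  # simplified B_bar = delta * B
        ys.append(sum(C[n] * h[n] for n in range(N)))
    return ys


def combine(left, right):
    # Compose two affine steps h -> a*h + b, applying `left` first.
    a1, b1 = left
    a2, b2 = right
    return (a1 * a2, a2 * b1 + b2)


def parallel_prefix(pairs):
    # Hillis-Steele inclusive scan: each round's updates are independent,
    # so on a GPU they could run in parallel (log2(T) rounds in total).
    out = list(pairs)
    offset = 1
    while offset < len(out):
        out = [out[i] if i < offset else combine(out[i - offset], out[i])
               for i in range(len(out))]
        offset *= 2
    return [b for _, b in out]  # with h_{-1} = 0, the b component equals h_t


xs = [0.5, -1.0, 2.0, 0.25, 1.5]
print(selective_scan(xs, A=[-1.0, -0.5], w_delta=0.7, b_delta=0.1,
                     w_B=[0.9, 0.3], w_C=[1.2, -0.4]))
```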

Mamba-3 introduces several breakthroughs that distinguish it from its predecessors:

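- Trapezoidal Discretization – Replaces the first-order discretization of earlier Mamba models with a trapezoidal rule that blends the current and previous inputs, making the SSM more expressive, reducing the need for short convolutions, and improving quality on downstream language tasks.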

- Complex State-Space Updates – Let the hidden state rotate rather than only decay, so the model can track state information such as a running parity, enabling tasks like parity and arithmetic reasoning that previous Mamba models could not reliably perform.

- Multi-Input, Multi-Output (MIMO) SSM – Improves inference efficiency by performing more computation per byte of state read from memory (higher arithmetic intensity), raising hardware utilization during memory-bound decoding without increasing the state size.
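To see why a trapezoidal rule helps, compare it with a first-order update on a toy scalar SSM, h'(t) = a·h(t) + b·x(t), whose exact solution is known. Here a = -1, b = 1, x(t) = sin t and h(0) = 0, so h(t) = (sin t - cos t + e^(-t)) / 2. Both rules propagate the state exactly with exp(Δ·a) and differ only in how they approximate the input integral over each step. The first-order rule uses only the current input, as in the simplified Mamba update; the trapezoidal rule averages the previous and current inputs. This sketch uses the classical trapezoidal weight of 1/2 and is not Mamba-3's exact parameterization.

```python
import math

# Toy problem (hypothetical): h'(t) = A_COEF*h(t) + B_COEF*x(t), h(0) = 0.
A_COEF, B_COEF = -1.0, 1.0


def x_input(t: float) -> float:
    return math.sin(t)


def exact_h(t: float) -> float:
    # Closed-form solution of h' = -h + sin(t) with h(0) = 0.
    return 0.5 * (math.sin(t) - math.cos(t) + math.exp(-t))


def max_error(delta: float, horizon: float, rule: str) -> float:
    """Largest |h_k - h(t_k)| over the run for a given discretization rule."""
    decay = math.exp(delta * A_COEF)  # exact propagator of the homogeneous part
    h, worst = 0.0, 0.0
    for k in range(1, round(horizon / delta) + 1):
        x_prev, x_curr = x_input((k - 1) * delta), x_input(k * delta)
        if rule == "euler":
            # First-order: only the current input enters the update.
            h = decay * h + delta * B_COEF * x_curr
        elif rule == "trapezoidal":
            # Second-order: average the two endpoints of the input integral,
            # with the previous input carried forward by one decay step.
            h = decay * h + 0.5 * delta * B_COEF * (decay * x_prev + x_curr)
        else:
            raise ValueError(f"unknown rule: {rule}")
        worst = max(worst, abs(h - exact_h(k * delta)))
    return worst


for delta in (0.1, 0.05):
    print(delta, max_error(delta, 10.0, "euler"), max_error(delta, 10.0, "trapezoidal"))
```

Halving Δ roughly halves the first-order error but roughly quarters the trapezoidal one. Because the trapezoidal update reads both x_{t-1} and x_t, it also behaves like a built-in width-2 convolution over the input, which is one way to see why it can reduce the need for a separate short convolution.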

These innovations, paired with architectural refinements such as QK-normalization and head-specific biases, are intended to improve model quality while letting Mamba-3 make fuller use of modern hardware during inference.
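The hardware point rests mainly on the MIMO change. A simplified, hypothetical cost model for one decoding step of one head makes it concrete. The state H has shape N × P. A single-input, single-output (SISO) step applies a rank-1 update and readout, while a MIMO step with rank R applies a rank-R update B·Xᵀ and readout Hᵀ·C. Reading and writing H dominates memory traffic and does not depend on R, but the FLOPs grow with R, so arithmetic intensity (FLOPs per byte) rises. The shapes, the 2-byte element size, and the function names `mimo_step` and `decode_step_cost` are assumptions for illustration; the model ignores caching, kernel fusion, and other implementation details.

```python
def mimo_step(H, alpha, B, X, C):
    """One decode step: H <- alpha*H + B @ X^T, then return Y = H^T @ C.

    H: N x P state, B and C: N x R, X: P x R (nested lists). R = 1 is SISO.
    Hypothetical helper for illustration only.
    """
    N, P, R = len(H), len(H[0]), len(B[0])
    for n in range(N):
        for p in range(P):
            # Decay the state and add the rank-R input update in place.
            H[n][p] = alpha * H[n][p] + sum(B[n][r] * X[p][r] for r in range(R))
    # Read out R outputs for each of the P head dimensions.
    return [[sum(H[n][p] * C[n][r] for n in range(N)) for r in range(R)]
            for p in range(P)]


def decode_step_cost(n_state, p_head, rank, bytes_per_elem=2):
    """Approximate FLOPs and bytes moved by mimo_step (hypothetical cost model)."""
    flops = n_state * p_head                 # alpha * H
    flops += 2 * n_state * p_head * rank     # B @ X^T (multiply + add)
    flops += 2 * n_state * p_head * rank     # H^T @ C (multiply + add)
    state_bytes = 2 * n_state * p_head * bytes_per_elem                   # read + write H
    io_bytes = (2 * n_state * rank + 2 * p_head * rank) * bytes_per_elem  # B, C, X, Y
    return flops, state_bytes + io_bytes


for rank in (1, 2, 4):
    flops, nbytes = decode_step_cost(n_state=128, p_head=64, rank=rank)
    print(rank, flops, nbytes, round(flops / nbytes, 2))
```

Under this model, going from R = 1 to R = 4 raises FLOPs per byte roughly threefold while the state H keeps the same N × P size.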

In the reported experiments, Mamba-3 matches or surpasses Transformer, Mamba-2, and Gated DeltaNet baselines across language modeling, retrieval, and state-tracking tasks. Its SSM-centric design lets it carry long-range context in a compact state, while the selective mechanism lets the model emphasize relevant context when producing each output, a critical advantage in sequence modeling.
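Why complex updates help with state tracking can be sketched with a one-dimensional complex state of magnitude 1. Each input digit d (with 0 ≤ d < m) multiplies the state by the rotation exp(2πi·d/m), so the state's angle records the running sum modulo m; m = 2 gives parity. By contrast, a real transition of the form exp(Δ·A) with A < 0 and Δ > 0 always lies strictly between 0 and 1, so it can shrink the state but never flip or rotate it, which is consistent with earlier Mamba models struggling on parity. This is a toy illustration of the principle, not Mamba-3's parameterization; `modular_sum` and `parity` are hypothetical helpers.

```python
import cmath
import math


def modular_sum(digits: list[int], m: int) -> int:
    """Track sum(digits) mod m with a unit-magnitude complex state (hypothetical helper)."""
    step = 2 * math.pi / m
    h = 1 + 0j
    for d in digits:
        h *= cmath.exp(1j * step * d)       # rotate by d/m of a full turn; |h| stays 1
    angle = cmath.phase(h) % (2 * math.pi)  # map (-pi, pi] onto [0, 2*pi)
    return round(angle / step) % m


def parity(bits: list[int]) -> int:
    # Parity is the m = 2 case: each 1-bit rotates the state by pi (multiplies by -1).
    return modular_sum(bits, 2)


print(parity([1, 0, 1, 1]))       # 1
print(modular_sum([3, 4, 5], 7))  # 5
```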

Despite these advances, Mamba-3 has limitations. Because a fixed-size state must compress the entire history, fixed-state architectures still lag behind attention-based models on complex retrieval tasks, where attention can look back at every cached token. Researchers see hybrid architectures, which combine Mamba's efficiency with attention layers for retrieval, as a promising path forward.
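The retrieval gap can be illustrated with a toy linear associative memory, S = Σᵢ vᵢ kᵢᵀ, the kind of fixed-size state used by linear attention and, loosely, by SSM layers (with scalar values, S is just a d-vector). With keys in d dimensions, at most d linearly independent keys can be stored and read back exactly; any further key is a linear combination of earlier ones, so no linear readout can return arbitrary values for all of them. A growing attention-style cache keeps every pair and, using a hard argmax over key similarities (the sharp limit of softmax attention), recovers each value. The keys, values, and helper names are hypothetical, and this is not Mamba-3's state update.

```python
import math


def linear_memory_recall(keys, values):
    """Write all pairs into S = sum_i v_i * k_i (scalar values), then read each key back."""
    d = len(keys[0])
    S = [0.0] * d  # fixed-size state, independent of how many pairs are stored
    for k, v in zip(keys, values):
        S = [s + v * k_j for s, k_j in zip(S, k)]
    return [sum(s * q_j for s, q_j in zip(S, q)) for q in keys]


def cache_recall(keys, values):
    """Keep every pair; answer each query with the value of the most similar stored key."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))
    return [values[max(range(len(keys)), key=lambda i: dot(q, keys[i]))] for q in keys]


# Four orthonormal keys fill a 4-dimensional state exactly.
keys = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
values = [1.0, 2.0, 3.0, 4.0]
print(linear_memory_recall(keys, values))    # [1.0, 2.0, 3.0, 4.0]

# A fifth, distinct key must overlap the first two, so recall now interferes.
r = 1 / math.sqrt(2)
keys5, values5 = keys + [[r, r, 0.0, 0.0]], values + [5.0]
print(linear_memory_recall(keys5, values5))  # first two entries and the last are wrong
print(cache_recall(keys5, values5))          # [1.0, 2.0, 3.0, 4.0, 5.0]
```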

Mamba-3 represents more than an incremental update: it rethinks how neural architectures can achieve speed, efficiency, and capability simultaneously. By building on structured SSMs and input-dependent state updates, Mamba-3 challenges the dominance of Transformers in autoregressive language modeling, offering a viable alternative whose cost grows linearly with sequence length and that makes efficient use of available hardware.