Meta has developed an AI model that can convert brain activity into speech

The company has shared its research about an AI model that can decode speech from noninvasive recordings of brain activity. It has potential to help people after traumatic brain injury, which left them unable to communicate through speech, typing, or gestures.

Decoding speech based on brain activity has been a long-established goal of neuroscientists and clinicians, but most of the progress has relied on invasive brain recording techniques, such as stereotactic electroencephalography and electrocorticography.

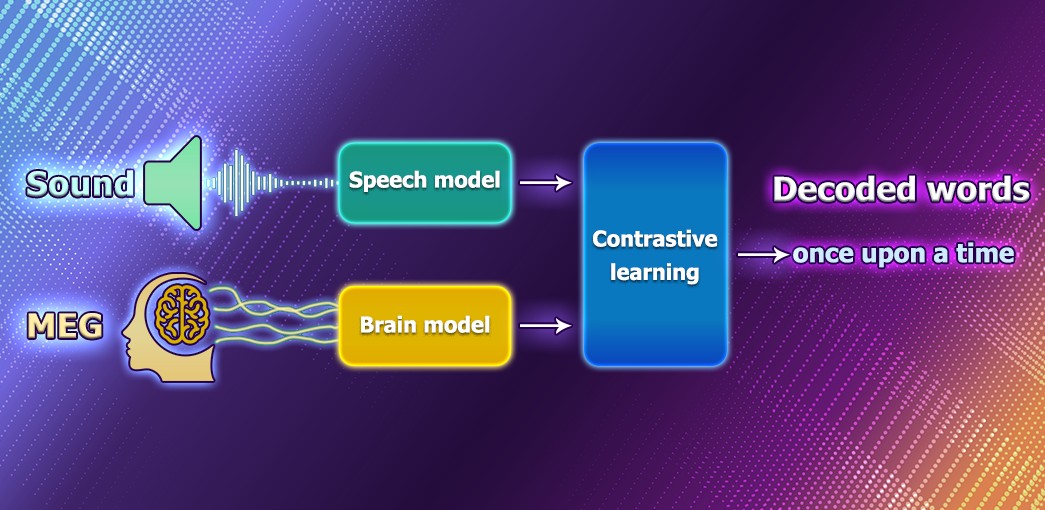

Meantime researchers from Meta think that converting speech via noninvasive methods would provide a safer, more scalable solution that could ultimately benefit more people. Thus, they created a deep learning model trained with contrastive learning and then used it to align noninvasive brain recordings and speech sounds.

To do this, scientists used an open source, self-supervised learning model wave2vec 2.0 to identify the complex representations of speech in the brains of volunteers while listening to audiobooks.

The process includes an input of electroencephalography and magnetoencephalography recordings into a “brain” model, which consists of a standard deep convolutional network with residual connections. Then, the created architecture learns to align the output of this brain model to the deep representations of the speech sounds that were presented to the participants.

After training, the system performs what’s known as zero-shot classification: with a snippet of brain activity, it can determine from a large pool of new audio files which one the person actually heard.

According to Meta: “The results of our research are encouraging because they show that self-supervised trained AI can successfully decode perceived speech from noninvasive recordings of brain activity, despite the noise and variability inherent in those data. These results are only a first step, however. In this work, we focused on decoding speech perception, but the ultimate goal of enabling patient communication will require extending this work to speech production. This line of research could even reach beyond assisting patients to potentially include enabling new ways of interacting with computers.”

Learn more about the research here