Philosophers vs Transformers: Neural net impersonates a famous cognitive scientist

Can computers think? Can AI models be conscious? These and similar questions often come up in discussions of recent AI progress achieved by natural language models such as GPT-3, LaMDA, and other transformers. They remain controversial and border on paradox, because they usually rest on many hidden assumptions and misconceptions about how the brain works and what thinking means. The only way forward is to state these assumptions explicitly and then explore how human information processing could be replicated by machines.

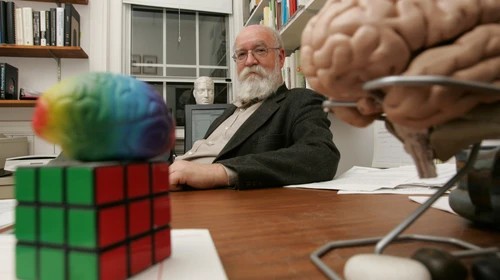

Recently, a team of AI scientists undertook an interesting experiment. They took the popular GPT-3 neural model by OpenAI and fine-tuned it on the full corpus of the works of Daniel Dennett, an American philosopher, writer, and cognitive scientist whose research centers on the philosophy of mind and the philosophy of science. The aim, as stated by the researchers, was to see whether the AI model could answer philosophical questions the way the philosopher himself would. Dennett took part in the experiment and answered ten philosophical questions, which were then fed into the fine-tuned version of the GPT-3 transformer model.

The experimental setup was simple. Ten questions were posed to both the philosopher and the computer. One example: “Do human beings have free will? What kind or kinds of freedom are worth having?” The AI was prompted with the same questions, augmented with context suggesting that they came from an interview with Dennett. The computer’s answers were then filtered in two steps: 1) each answer was truncated to approximately the same length as the human’s response; 2) answers containing revealing words (such as “interview”) were dropped. Four AI-generated answers were obtained for every question, and no cherry-picking or editing was done.
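The two filtering steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' actual code: the function name `filter_candidates`, measuring length in words, and matching revealing words as whole lowercase words are all choices made here for the example.

```python
import re


def filter_candidates(candidates, human_answer, revealing_words=("interview",)):
    """Apply the two filtering steps described in the article (illustrative sketch).

    Assumptions (not from the original study): length is measured in words,
    and a revealing word must match a whole word, case-insensitively.
    """
    # Step 1 target: the human answer's length, measured here in words.
    max_words = len(human_answer.split())

    kept = []
    for text in candidates:
        # Step 1: truncate the generated answer to the human answer's length.
        truncated = " ".join(text.split()[:max_words])

        # Step 2: drop answers that contain any revealing word.
        tokens = set(re.findall(r"[a-z']+", truncated.lower()))
        if any(word in tokens for word in revealing_words):
            continue

        kept.append(truncated)
    return kept
```

In a setup like the one described, this function would be called once per question, with generation repeated until four answers survive the filter. The whole-word match is a design choice for the sketch; a substring match would also catch forms such as "interviewer".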

How were the results evaluated? Reviewers were given a quiz in which, for each question, they had to pick the “correct” answer (Dennett’s own) from a batch of five; the other four were generated by the AI. The quiz is available online for anyone who wants to test their detective skills, and we recommend trying it to see whether you can do better than the experts:

https://ucriverside.az1.qualtrics.com/jfe/form/SV_9Hme3GzwivSSsTk

The result was not entirely unexpected. “Even knowledgeable philosophers who are experts on Dan Dennett’s work have substantial difficulty distinguishing the answers created by this language generation program from Dennett’s own answers,” said the research leader. On some questions the participants’ accuracy was not much higher than random guessing; on others it was somewhat better.
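To put "random guessing" in perspective: with five answers per question, a blind guess is right one time in five. The sketch below computes that baseline and the probability of getting at least a given number of the ten questions right purely by chance. The helper names are hypothetical, and the code makes no claim about the scores participants actually achieved.

```python
from math import comb


def chance_accuracy(n_options):
    """Probability that a uniformly random guess picks the one correct option."""
    return 1.0 / n_options


def prob_at_least(k, n, p):
    """Binomial tail: probability of at least k successes in n independent
    trials, each succeeding with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


if __name__ == "__main__":
    n_questions = 10  # ten questions, as in the experiment
    n_options = 5     # one human answer plus four AI-generated answers
    p = chance_accuracy(n_options)

    print("chance accuracy per question:", p)
    print("expected correct by chance:", n_questions * p)
    for k in range(n_questions + 1):
        print(k, "or more correct by chance:", prob_at_least(k, n_questions, p))
```

A reviewer's score can be compared against this table: the less likely a score is under pure guessing, the stronger the evidence that the reviewer could actually tell Dennett's answers apart from the generated ones.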

What insights can we draw from this research? Does it mean that GPT-like models will soon be able to replace humans in many fields? Does it have anything to do with thinking, natural language understanding, and artificial general intelligence? Will machine learning produce human-level results, and if so, when? These are important and interesting questions, and we are still far from final answers.