Spray-on smart skin uses AI to swiftly interpret hand tasks

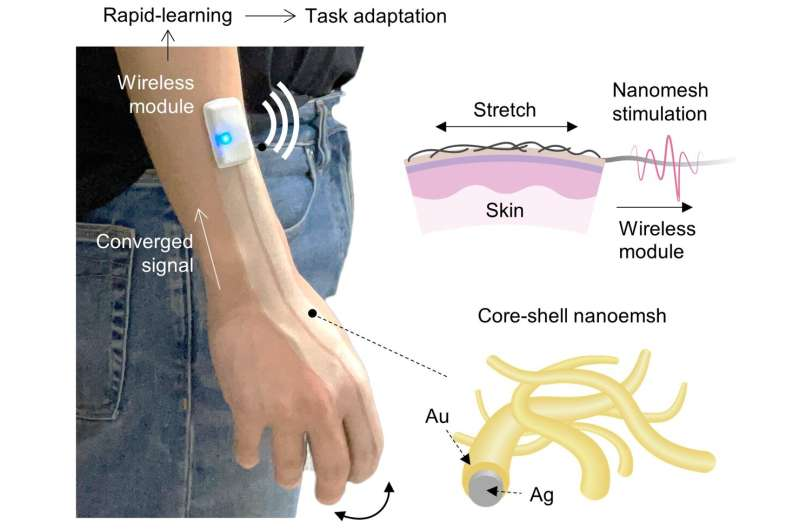

Researchers at Stanford University have developed a smart skin: a new type of stretchable, biocompatible material that is sprayed onto the back of the hand, much like suntan spray. Embedded in the material is a tiny electrical network that senses how the skin stretches and bends.

Using artificial intelligence, this new skin can help researchers interpret a wide range of everyday tasks from hand motions and gestures. The scientists believe it could have applications in fields such as gaming, sports, telemedicine, and robotics.

The key innovation is a sprayable, electrically sensitive mesh network embedded in polyurethane, a durable yet stretchable material. The mesh consists of millions of nanowires that touch one another to form dynamic electrical pathways. It is electrically active, biocompatible, and breathable, and it stays in place until it is rubbed off with soap and water. It can conform closely to the wrinkles and folds of each finger.

"As the fingers bend and twist, the nanowires in the mesh get squeezed together and stretched apart, changing the electrical conductivity of the mesh. These changes can be measured and analyzed to tell us precisely how a hand or a finger or a joint is moving," explained Zhenan Bao, the K.K. Lee Professor of Chemical Engineering and senior author of the study.
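To make the measurement step more concrete, here is a minimal Python sketch of how such conductivity changes could be expressed as a relative resistance change, ΔR/R₀, measured against a resting baseline, a common way of reporting strain-sensor signals. The function names, the baseline window, the 5% motion threshold, and the readings are all hypothetical; the article does not describe the team's actual signal processing.

```python
from statistics import mean


def relative_resistance_change(samples, baseline_count=5):
    """Return (R - R0) / R0 for each resistance sample.

    R0 is estimated as the mean of the first `baseline_count` samples,
    which this sketch assumes were recorded with the hand at rest.
    """
    if baseline_count <= 0 or baseline_count > len(samples):
        raise ValueError("baseline_count must be between 1 and len(samples)")
    r0 = mean(samples[:baseline_count])
    return [(r - r0) / r0 for r in samples]


def flag_motion(changes, threshold=0.05):
    """Mark samples whose |dR/R0| exceeds a hypothetical motion threshold."""
    return [abs(c) > threshold for c in changes]


if __name__ == "__main__":
    # Hypothetical resistance readings in ohms: hand at rest, then a finger bend.
    readings = [100.0, 100.2, 99.8, 100.1, 99.9, 104.0, 112.0, 108.0, 101.0]
    changes = relative_resistance_change(readings)
    print([round(c, 3) for c in changes])
    print(flag_motion(changes))
```

Normalizing by the resting baseline means that differences in absolute resistance from one sprayed layer to the next matter less than the shape of the signal as the fingers move.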

The researchers chose to spray the mesh directly onto the skin so that it is supported without a substrate. This key engineering decision eliminated unwanted motion artifacts and allowed them to use a single trace of conductive mesh to capture information from multiple finger joints.

With machine learning, computers can monitor the changing patterns in conductivity and map them to specific physical tasks and gestures. For example, when the wearer types an X on a keyboard, the algorithm learns to recognize that task from the resulting pattern of changes in electrical conductivity. Once the algorithm is suitably trained, the physical keyboard is no longer required. The same principles can be used to recognize sign language or even to recognize objects by tracing their exterior surfaces.
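The idea of mapping conductivity patterns to gestures can be illustrated with a deliberately simple classifier. The Python sketch below uses a nearest-centroid approach: it averages labeled windows of a single sensor trace, then assigns a new window to the closest average. This technique is swapped in for illustration only; the article does not say which learning algorithm the Stanford team used, and the gesture labels and signal values are hypothetical.

```python
import math
from collections import defaultdict


def train_centroids(examples):
    """Average the training windows for each gesture label.

    `examples` is a list of (label, window) pairs, where each window is a
    hypothetical fixed-length list of dR/R0 samples from one sensor trace.
    """
    if not examples:
        raise ValueError("need at least one training example")
    length = len(examples[0][1])
    grouped = defaultdict(list)
    for label, window in examples:
        if len(window) != length:
            raise ValueError("all windows must have the same length")
        grouped[label].append(window)
    # Element-wise mean of all windows that share a label.
    return {
        label: [sum(values) / len(windows) for values in zip(*windows)]
        for label, windows in grouped.items()
    }


def classify(centroids, window):
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], window))


if __name__ == "__main__":
    training = [
        ("type_x", [0.00, 0.08, 0.15, 0.05]),
        ("type_x", [0.01, 0.09, 0.14, 0.04]),
        ("fist", [0.10, 0.20, 0.25, 0.22]),
        ("fist", [0.12, 0.19, 0.26, 0.21]),
    ]
    centroids = train_centroids(training)
    print(classify(centroids, [0.01, 0.07, 0.16, 0.05]))
```

Once the centroids are stored, classifying a new window needs only the sensor signal, which mirrors the point that a trained model no longer needs the physical keyboard. A real system would need far richer features and training data than this toy example.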