Voice-activated manufacturing: from words to reality in minutes

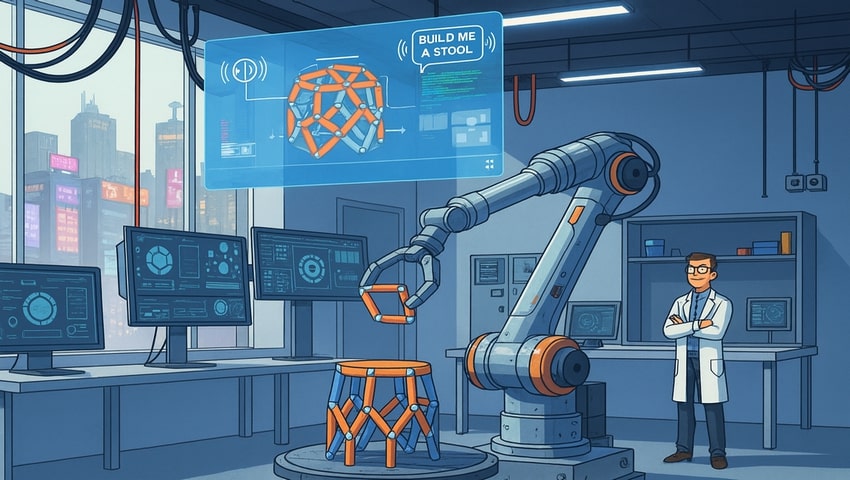

The line between science fiction and reality is getting blurrier thanks to MIT researchers who have developed a system that can turn spoken commands into physical objects within minutes. The “Speech-to-Reality” platform integrates natural language processing, 3D generative AI, geometric analysis, and robotic assembly. It enables on-demand fabrication of furniture and other functional or decorative items, without requiring users to have expertise in 3D modeling or robotics.

The system workflow begins with speech recognition, converting a user’s spoken input into text. A large language model (LLM) interprets the text to identify the requested physical object while filtering out abstract or non-actionable commands. The processed request serves as input to a 3D generative AI model, which produces a digital mesh representation of the object.
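
To make the hand-off between these stages concrete, here is a minimal sketch of the speech-to-mesh chain. It is not the researchers' code: `run_pipeline`, the `Mesh` placeholder, and the three `toy_*` stand-ins are hypothetical names, and a simple keyword lookup takes the place of the LLM so the example runs without any model.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Mesh:
    """Placeholder for a generated 3D mesh (vertices and triangular faces)."""
    vertices: list
    faces: list


def run_pipeline(
    audio: bytes,
    transcribe: Callable[[bytes], str],
    extract_object: Callable[[str], Optional[str]],
    generate_mesh: Callable[[str], Mesh],
) -> Optional[Mesh]:
    """Chain the three stages; return None when no buildable object is named."""
    text = transcribe(audio)
    obj = extract_object(text)
    if obj is None:  # abstract or non-actionable request: stop here
        return None
    return generate_mesh(obj)


# Toy stand-ins (assumptions for illustration only).
KNOWN_OBJECTS = {"stool", "table", "shelf"}


def toy_transcribe(audio: bytes) -> str:
    # Pretend the audio bytes already contain the transcript.
    return audio.decode("utf-8")


def toy_extract_object(text: str) -> Optional[str]:
    # Keyword lookup standing in for the LLM's interpretation step.
    for word in text.lower().replace(".", " ").split():
        if word in KNOWN_OBJECTS:
            return word
    return None


def toy_generate_mesh(obj: str) -> Mesh:
    # A single triangle standing in for the generative model's output.
    return Mesh(vertices=[(0, 0, 0), (1, 0, 0), (0, 1, 0)], faces=[(0, 1, 2)])
```

Passing the stages in as callables keeps the sketch independent of any particular speech recognizer, language model, or mesh generator; a request such as "make me happy" names no known object, so the pipeline returns `None` instead of a mesh.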

Because AI-generated meshes are not inherently compatible with robotic assembly, the system applies a component discretization algorithm that divides the mesh into modular cuboctahedron units. Each unit measures 10 cm per side and is designed for magnetic interlocking, enabling reversible, tool-free assembly. Geometric processing algorithms then verify assembly feasibility, addressing constraints such as inventory limits, unsupported overhangs, vertical stacking stability, and connectivity between components. Directional rescaling and connectivity-aware sequencing ensure structural integrity and prevent collisions during robotic assembly.
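
The checks described above can be illustrated on a simple grid model. The sketch below is an assumption-laden simplification, not the published algorithm: it treats each unit as one cell of a cubic grid with face-to-face connections, snaps sampled mesh points to 10 cm cells, and uses hypothetical function names and thresholds (`inventory`, `max_cantilever`).

```python
import math
from collections import deque

# Face-adjacent neighbors on a cubic grid (an assumed connection model).
NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def discretize(points, unit=0.10):
    """Snap points sampled from a mesh (in meters) to integer grid cells."""
    return {tuple(math.floor(c / unit) for c in p) for p in points}


def grounded_cells(cells):
    """Cells reachable from the ground layer (z == 0) via face neighbors."""
    cells = set(cells)
    queue = deque(c for c in cells if c[2] == 0)
    seen = set(queue)
    while queue:
        x, y, z = queue.popleft()
        for dx, dy, dz in NEIGHBORS:
            n = (x + dx, y + dy, z + dz)
            if n in cells and n not in seen:
                seen.add(n)
                queue.append(n)
    return seen


def max_overhang(cells):
    """Longest horizontal run (in cells) from a cell to one supported from below.

    Cells with no supported cell anywhere in their layer count as infinite.
    """
    cells = set(cells)
    dist = {c: 0 for c in cells if c[2] == 0 or (c[0], c[1], c[2] - 1) in cells}
    queue = deque(dist)
    while queue:
        x, y, z = queue.popleft()
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            n = (x + dx, y + dy, z)
            if n in cells and n not in dist:
                dist[n] = dist[(x, y, z)] + 1
                queue.append(n)
    if len(dist) < len(cells):
        return float("inf")
    return max(dist.values(), default=0)


def check_feasibility(cells, inventory, max_cantilever):
    """Return a list of violated constraints; an empty list means buildable."""
    cells = set(cells)
    issues = []
    if len(cells) > inventory:
        issues.append("inventory")
    if grounded_cells(cells) != cells:
        issues.append("disconnected")
    if max_overhang(cells) > max_cantilever:
        issues.append("overhang")
    return issues


# Example: a small table with four two-unit legs and a 3 x 3 top (17 units).
legs = {(x, y, z) for x in (0, 2) for y in (0, 2) for z in (0, 1)}
top = {(x, y, 2) for x in range(3) for y in range(3)}
table = legs | top
```

In this model the center of the tabletop sits two cells away from the nearest leg, so the table passes with `max_cantilever=2` and fails with `max_cantilever=1`. A real system would respond to a failed check by modifying the geometry, for example through the directional rescaling the researchers describe, rather than simply rejecting the request.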

An automated path planning module, built on the Python-URX library, generates pick-and-place trajectories for a six-axis UR10 robotic arm equipped with a custom gripper. The gripper’s passive alignment indexers ensure precise placement even with slight component wear. Assembly occurs layer by layer, following a connectivity-prioritized order to guarantee grounded and stable construction. A conveyor system recirculates components for subsequent builds, enabling sustainable, circular production.
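
One way to picture a connectivity-prioritized, bottom-up build order is a priority-queue traversal that starts at the ground layer and only ever places a unit next to one that is already in place. The sketch below works under that assumption and is not the team's planner: the cubic grid model, `assembly_order`, `place_waypoints`, and the 5 cm approach clearance are all hypothetical, and no robot library is called. In the real system, poses like these would be handed to the arm controller.

```python
import heapq

# Face-adjacent neighbors on a cubic grid (an assumed connection model).
NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def assembly_order(cells):
    """Order cells so each one touches the ground or an earlier-placed cell.

    Among the cells that are currently placeable, the lowest layer goes first.
    Cells with no path to the ground are left out of the result.
    """
    cells = set(cells)
    # Heap entries are (z, x, y) so the lowest layer is popped first.
    heap = [(z, x, y) for (x, y, z) in cells if z == 0]
    heapq.heapify(heap)
    queued = {(x, y, z) for (z, x, y) in heap}
    order = []
    while heap:
        z, x, y = heapq.heappop(heap)
        order.append((x, y, z))
        for dx, dy, dz in NEIGHBORS:
            n = (x + dx, y + dy, z + dz)
            if n in cells and n not in queued:
                queued.add(n)
                heapq.heappush(heap, (n[2], n[0], n[1]))
    return order


def place_waypoints(cell, unit=0.10, clearance=0.05):
    """Approach and final positions (meters) for dropping a unit into a cell."""
    x, y, z = (c * unit for c in cell)
    return [(x, y, z + clearance), (x, y, z)]


# Example: two one-unit legs joined by a three-unit bridge.
arch = {(0, 0, 0), (2, 0, 0), (0, 0, 1), (1, 0, 1), (2, 0, 1)}
order = assembly_order(arch)
```

For the arch, both ground-level units are placed first, and the bridge is then filled in from one side, so no unit is ever released in mid-air.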

The system has demonstrated rapid assembly of various objects, including stools, tables, shelves, and decorative items like letters or animal figures. Objects with large overhangs, tall vertical stacks, or branching structures are successfully fabricated thanks to constraint-aware geometric processing. Calibration of the robotic arm’s velocity and acceleration further ensures reliable operation without inducing structural instability.

While the current implementation uses 10 cm units, the approach is scalable: smaller components would allow higher-resolution builds, and the system could potentially be integrated with hybrid manufacturing techniques. Future iterations could incorporate augmented reality or gesture-based control for multimodal interaction, as well as fully automated disassembly and adaptive modification of existing objects.
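
Higher resolution comes at a cost in part count, which matters when builds are limited by inventory. As a rough illustration, the sketch below counts the grid cells in an object's bounding box for a given unit size. The function name and the 40 cm example are assumptions, and a real object would fill only part of its bounding box, so these figures are upper bounds.

```python
def bounding_box_units(size_cm, unit_cm):
    """Upper bound on units: grid cells needed to cover a bounding box.

    size_cm is (width, depth, height) in whole centimeters; unit_cm is the
    unit edge length in whole centimeters.
    """
    count = 1
    for extent in size_cm:
        count *= -(-extent // unit_cm)  # integer ceiling division
    return count


coarse = bounding_box_units((40, 40, 40), 10)  # 4 * 4 * 4 cells
fine = bounding_box_units((40, 40, 40), 5)     # 8 * 8 * 8 cells
```

Halving the unit size multiplies the bound by eight, from 64 to 512 cells in this example.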

The Speech-to-Reality platform represents a technical framework for bridging AI-driven generative design with physical fabrication. By combining language understanding, 3D AI, discrete assembly, and robotic control, it enables rapid, on-demand, and sustainable creation of physical objects, providing a pathway for scalable human-AI co-creation in real-world environments.