W2V-BERT: Combining Contrastive Learning and Masked Language Modeling for Self-supervised Speech Pre-training

Motivated by the success of masked language modeling (MLM) in pre-training natural language processing models, the developers propose w2v-BERT that explores MLM for self-supervised speech representation learning.

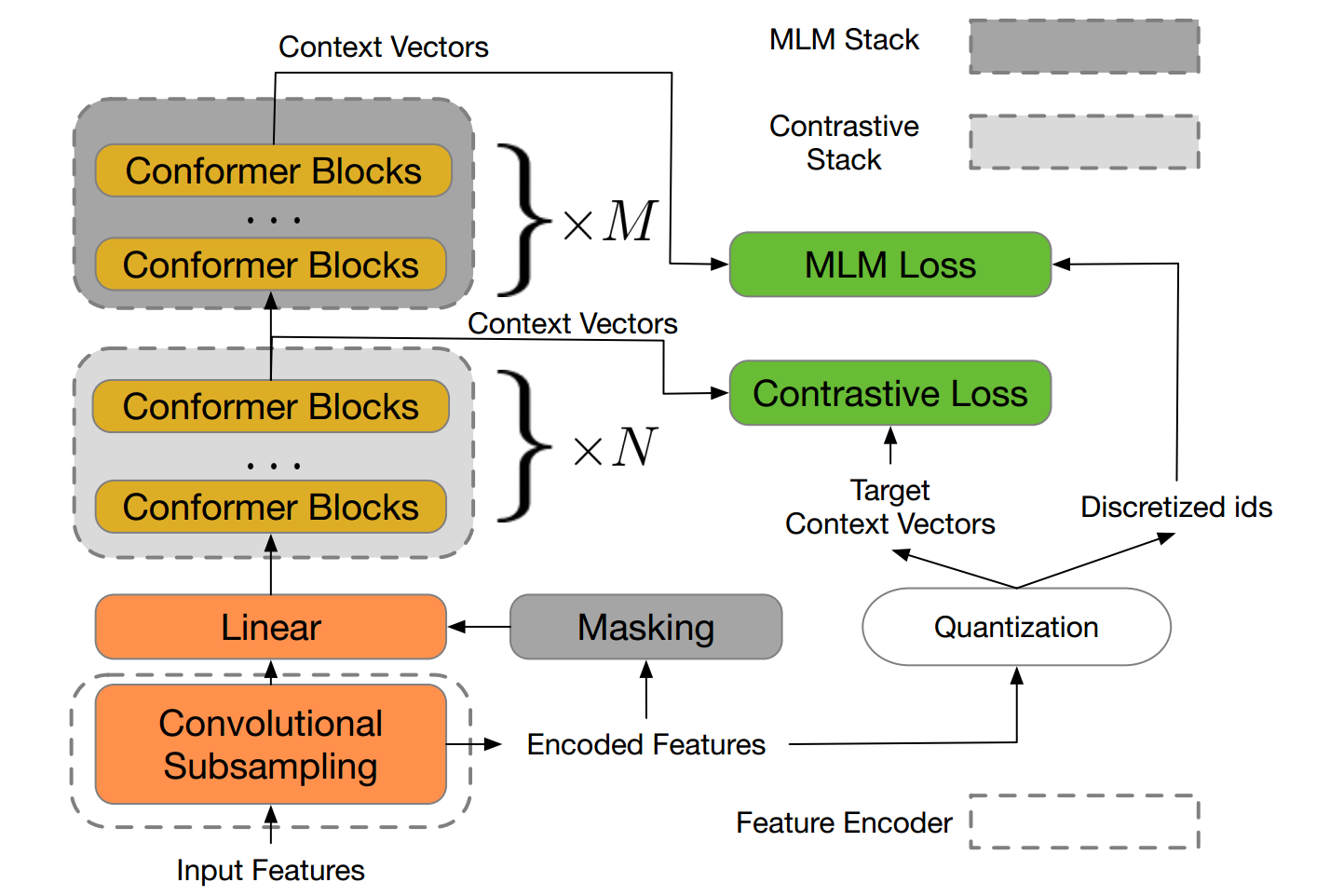

w2v-BERT is a framework that combines contrastive learning and MLM, where the former trains the model to discretize input continuous speech signals into a finite set of discriminative speech tokens, and the latter trains the model to learn contextualized speech representations via solving a masked prediction task consuming the discretized tokens.

In contrast to existing MLM-based speech pre-training frameworks such as HuBERT, which relies on an iterative re-clustering and re-training process, or vq-wav2vec, which concatenates two separately trained modules, w2v-BERT can be optimized in an end-to-end fashion by solving the two self-supervised tasks (the contrastive task and MLM) simultaneously.

The experiments show that w2v-BERT achieves competitive results compared to current state-of-the-art pre-trained models on the LibriSpeech benchmarks when using the Libri-Light~60k corpus as the unsupervised data.

In particular, when compared to published models such as conformer-based wav2vec~2.0 and HuBERT, the represented model shows 5% to 10% relative WER reduction on the test-clean and test-other subsets. When applied to Google's Voice Search traffic dataset, w2v-BERT outperforms our internal conformer-based wav2vec~2.0 by more than 30% relatively.

You can view the full article here

There is also a tutorial video on YouTube.