In 2025, AI moved from hype to reality, becoming a core force shaping how we create content, browse the web, work, and make decisions. This article explores the biggest shifts: the rise of agentic AI and vibe coding, tightening regulation, and the growing battle over quality and trust.

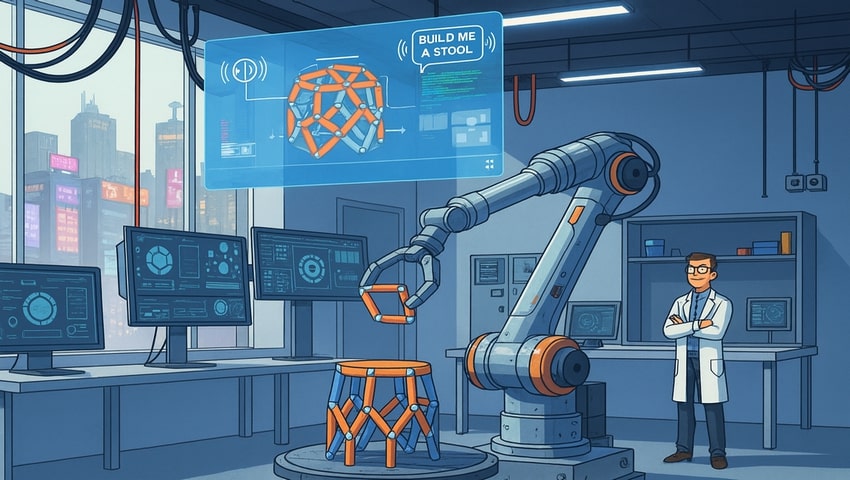

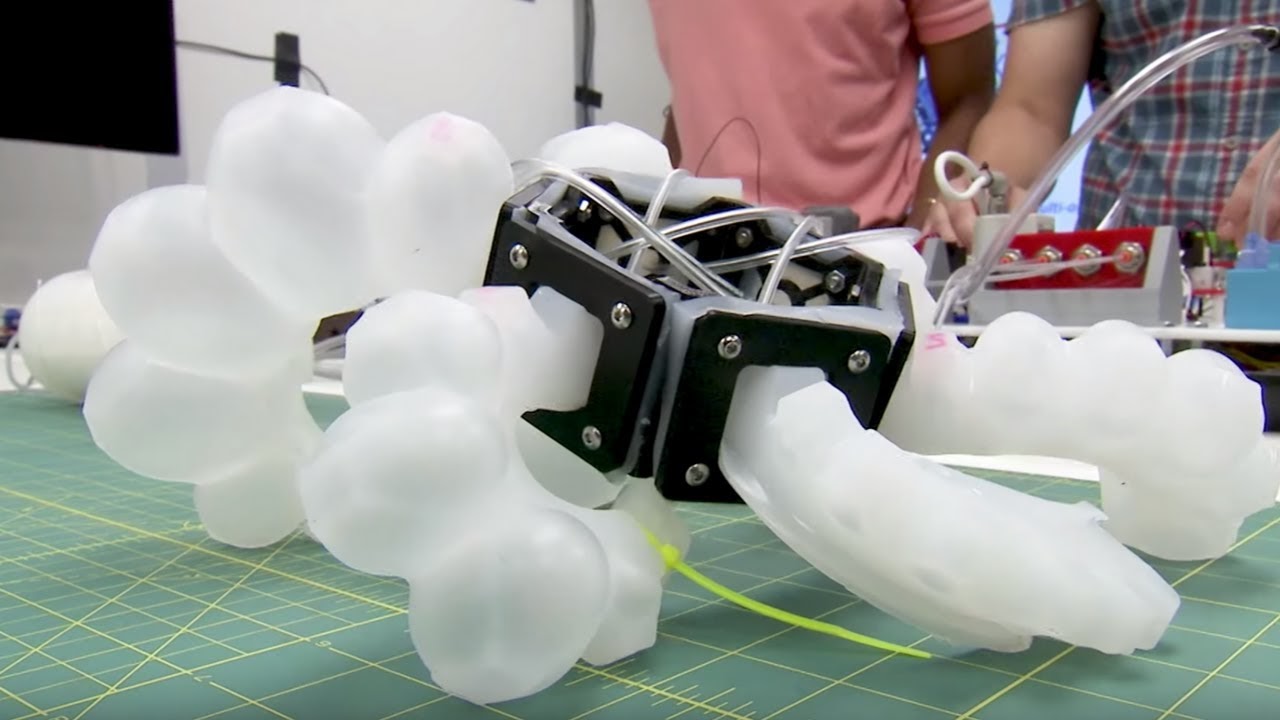

The Speech-to-Reality system transforms spoken commands into physical objects using a combination of natural language processing, 3D generative AI, and robotic assembly. The system enables users to request items like chairs, stools, or shelves and have them assembled by a robotic arm in as little as five minutes.

Scientists have engineered a transneuron – an artificial neuron capable of mimicking the activity of multiple regions of the human brain. This advancement could accelerate the development of robots with human-like perception, adaptability, and learning.

FlyingToolbox is a drone system capable of docking and exchanging tools mid-air, even in turbulent airflow. This technology enables precise multi-stage operations: from maintenance and high-altitude construction to emergency response missions.

Google DeepMind has introduced Gemini Robotics – an AI system that allows robots to “think before acting,” plan complex tasks, and even transfer skills across different robot types. With advanced reasoning, safety features, and cross-embodiment learning, robots become truly intelligent.

Now AI can autonomously generate the “brains” of robots, creating a fully functional drone control system 20 times faster than humans. The experiment with generative AI models like ChatGPT, Gemini, and Claude reveals both the potential and current limits of machines building machines.

The new system enables groups of robots to act as a unified team. The MultiRobot FrameWork lets robots share real-time information about their environment, positions, and tasks, mirroring the collective behavior seen in insect colonies, but powered by advanced sensors and computation.

Skydweller is a solar-powered drone designed for long-endurance missions. With AI-powered radar, self-healing systems, and the ability to fly autonomously for up to 90 days straight, it sets a new standard for persistent aerial surveillance and data collection.

ATMO is a robot that transforms mid-air from a flying drone into a ground rover. By overcoming the long-standing challenge of hybrid robots getting stuck on rough terrain, this breakthrough unlocks new possibilities for autonomous delivery, disaster response, and planetary exploration.

MIT researchers have developed MiFly, a low-power, RF-based system that enables drones to self-localize in complete darkness, indoors, and in low-visibility conditions. By using a single backscatter tag and dual-polarization radar, MiFly navigates without relying on visual cues or external infrastructure.

The two-trajectory planning system lets MAVs explore unknown paths while always maintaining a safe backup route. Powered by LiDAR-based perception and the CIRI algorithm, drones dynamically generate real-time flight paths for high-speed navigation in unpredictable environments.

The Indian Patent Office has granted a patent for an innovative landing system for mini-UAVs. This technology enables precise landings in challenging terrains and has potential applications in both military and civilian logistics, including high-altitude deliveries and emergencies.

A low-cost, innovative accident avoidance system for drones uses onboard sensors and cameras to autonomously prevent mid-air collisions. This technology is crucial for UAV operations, ensuring safety and efficiency in increasingly crowded airspaces.

A new computer vision system significantly reduces energy consumption while providing real-time, realistic spatial awareness. It enhances AI systems' ability to accurately perceive 3D space – crucial for technologies like self-driving cars and UAVs.

Researchers created insect-inspired autonomous navigation strategies for tiny, lightweight robots. Tested on a 56-gram drone, the system enables it to return home after long journeys using minimal computation and memory.

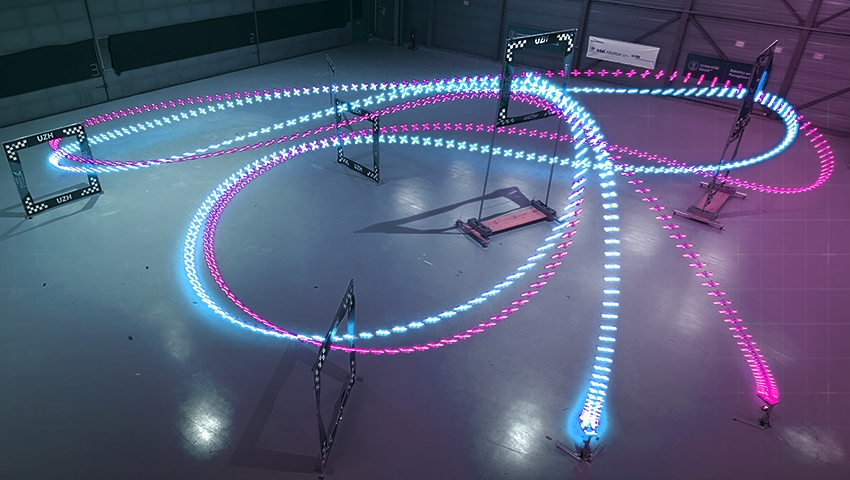

Drone racing has been used to test neural networks for future space missions. This project aims to autonomously manage complex spacecraft maneuvers, optimizing onboard operations and enhancing mission efficiency and robustness.

Inspired by ants, researchers from the Universities of Edinburgh and Sheffield are developing an artificial neural network to help robots recognize and remember routes in complex natural environments.

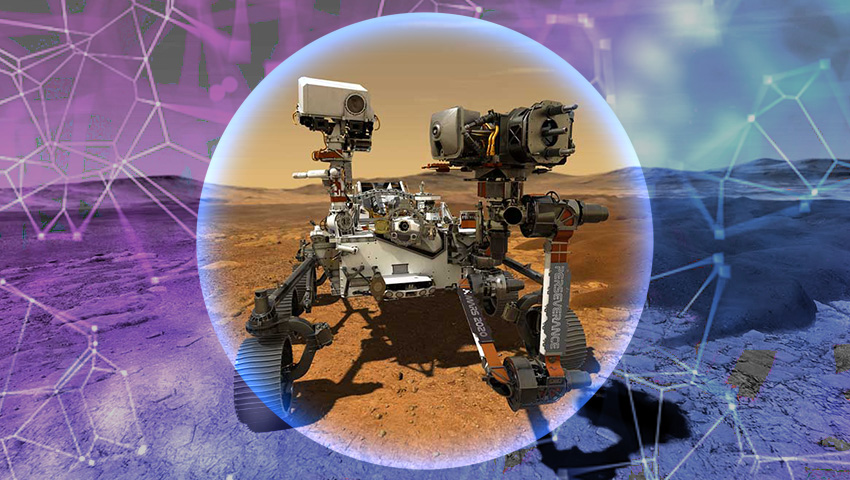

The European Space Agency is developing a sample retrieval system using neural networks, aiming to collect and transport samples from Mars. The challenging mission of returning samples gathered by the Perseverance rover is considered crucial for unlocking the mysteries of the Red Planet.

Researchers are working on a more effective way to train machines for uncertain, real-world situations. A new algorithm will decide when a “student” machine should follow its teacher, and when it should learn on its own.

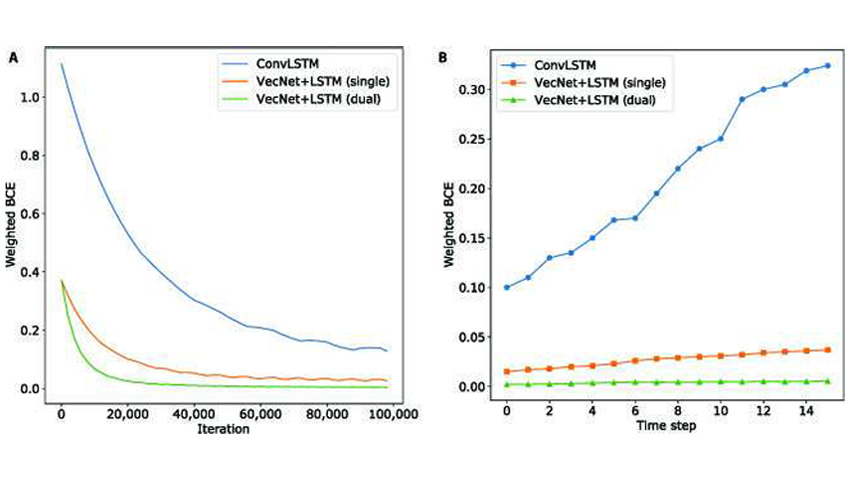

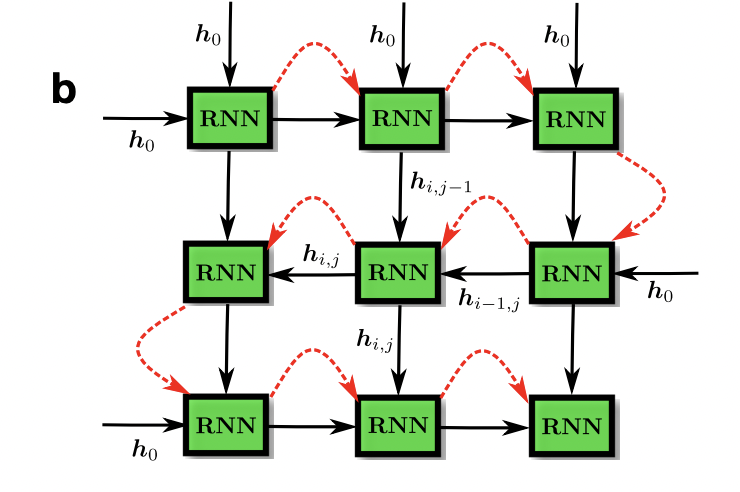

Researchers developed a new approach to motion modeling using relative position change. They evaluated the ability of deep neural network architectures to model motion using motion recognition and prediction tasks.

Researchers designed a new AI algorithm that visualizes data clusters and other macroscopic features so that they are as distinct, easy to observe, and human-understandable as possible.

Researchers have developed DetectGPT, which can distinguish AI-generated text from human-written text 95% of the time for popular open-source LLMs.

Researchers have recently created a new neuromorphic computing system supporting deep belief neural networks (DBNs) - a generative and graphical class of deep learning models.

A team of scientists has developed a machine learning solution to forecast amine emissions from carbon-capture plants using experimental data from a stress test performed at an actual plant in Germany.

Scientists have developed the first bio-realistic artificial neuron that can effectively interact with real biological neurons.

Scientists presented a smart bionic finger that can create 3D maps of the internal structure of materials by touching their exterior surface.

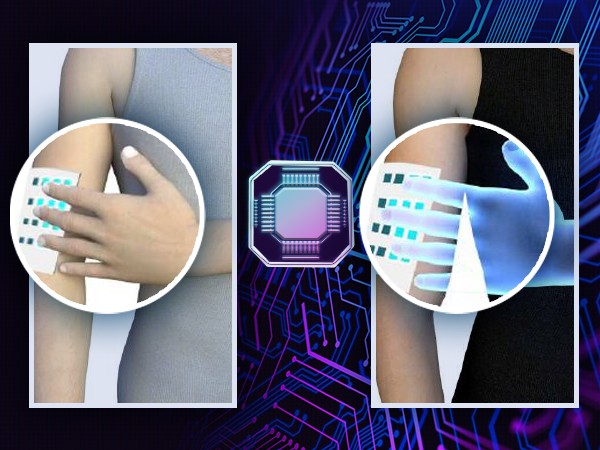

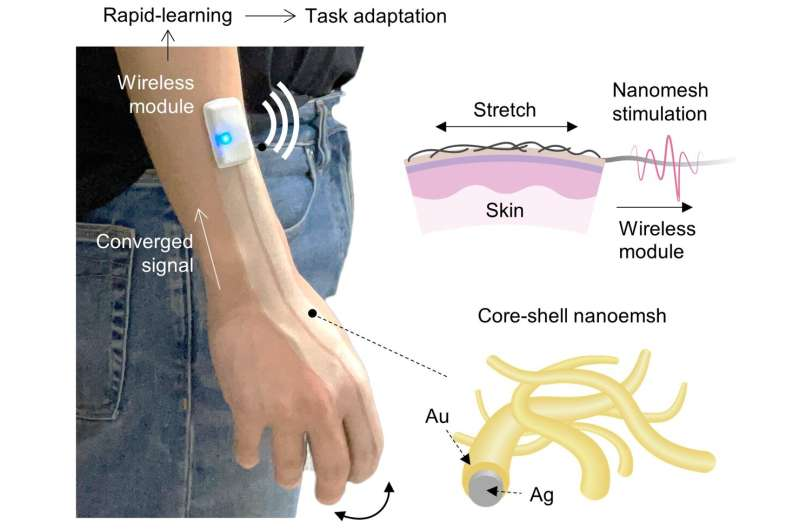

The wireless soft e-skin can both detect and transmit the sense of touch, and form a sensory network, which opens up great possibilities for improving interactive sensory communication.

Meta AI launched LLaMA, a collection of foundation language models that can compete with or even outperform the best existing models such as GPT-3, Chinchilla and PaLM.

MusicLM is a new music generation AI that creates high-quality music from textual descriptions, much as DALL-E generates images from text.

Scientists from the University of Michigan conducted a study of robot behavior strategies to restore trust between a bot and a human. Can such strategies fully restore trust and how effective are they after repeated errors?

A group of researchers have created a Bayesian machine, an AI approach that performs computations based on Bayes' theorem, using memristors. It is significantly more energy-efficient than existing hardware solutions, and could be used for safety-critical applications.
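For readers unfamiliar with Bayes' theorem, the computation such a machine performs can be illustrated with a minimal sketch. The function name `posterior` and the sensor numbers below are hypothetical and chosen only for illustration; they say nothing about how the memristor hardware itself is built.

```python
def posterior(prior, likelihood, false_positive_rate):
    """Bayes' theorem: P(event | alarm) from P(event) and the alarm's error rates."""
    # Total probability of seeing an alarm, whether or not the event occurred.
    evidence = likelihood * prior + false_positive_rate * (1.0 - prior)
    return likelihood * prior / evidence


# Hypothetical safety sensor: the event is rare (1%), the sensor fires on
# 95% of real events and on 5% of non-events.
p = posterior(prior=0.01, likelihood=0.95, false_positive_rate=0.05)
print(f"P(event | alarm) = {p:.3f}")
```

Even with a fairly accurate sensor, the posterior stays well below one half because the event is rare, which is the kind of reasoning under uncertainty that matters in safety-critical settings.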

Using advances in artificial intelligence, engineers at the University of Colorado Boulder are working on a new type of walking cane for people who are blind or visually impaired.

Tel Aviv University researchers have achieved a technological-biological breakthrough: in response to the presence of an odor, the new biological sensor sends data that the robot is able to detect and interpret.

Text-to-speech models usually require significantly longer training samples, while VALL-E creates a much more natural-sounding synthetic voice from just a few seconds of audio.

Researchers from Stanford University developed a new type of stretchable biocompatible material that gets sprayed on the back of the hand and can recognize its movements.

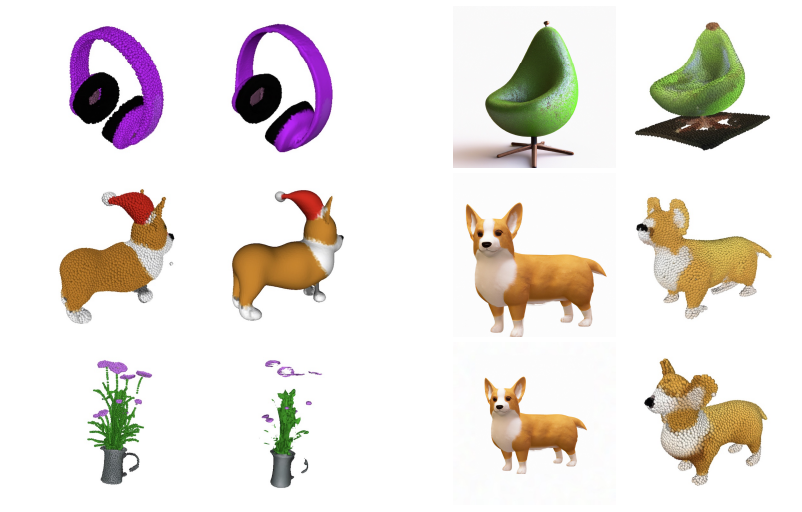

Point·E is a new system for text-conditional synthesis of 3D point clouds that first generates synthetic views and then generates colored point clouds conditioned on these views.

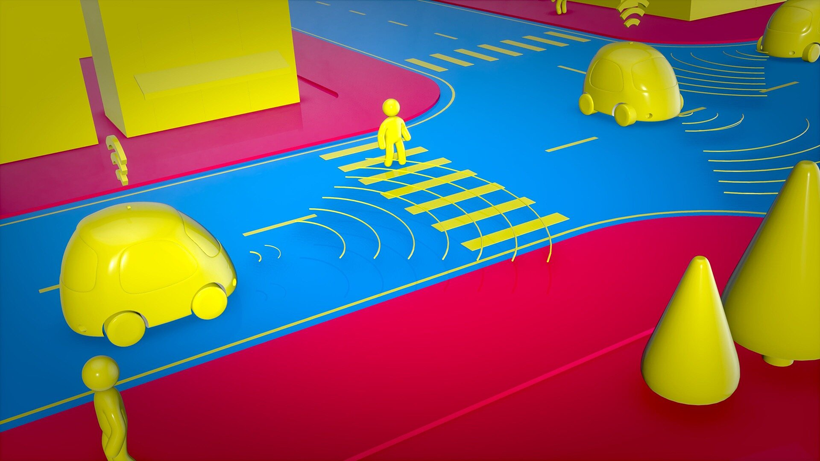

Self-driving cars have long been considered the next-generation mode of transportation. To enable autonomous navigation of such vehicles, numerous different technologies need to be implemented.

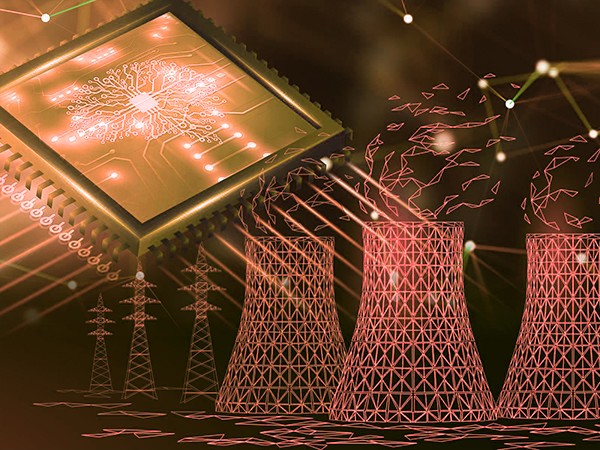

New research from the Pacific Northwest National Laboratory uses machine learning, data analysis and artificial intelligence to identify potential nuclear threats.

Researchers have discovered new ways for retailers to use AI in conjunction with in-store cameras to better understand consumer behavior and adapt store layouts to maximize sales.

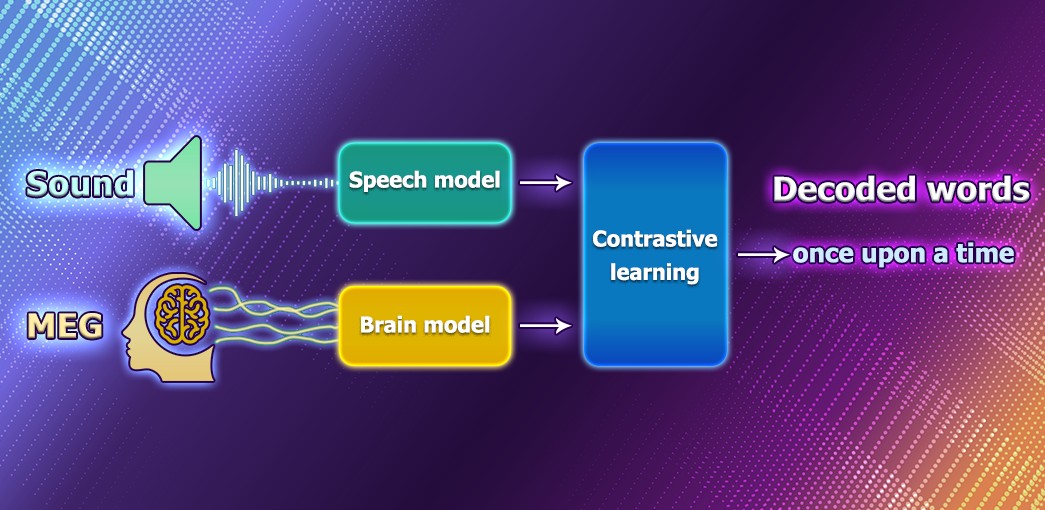

Decoding speech from brain activity has been a long-standing goal of neuroscientists and clinicians. Meta is now working on an AI model that can decode speech from noninvasive recordings of brain activity to help people after traumatic brain injury.

Look to Speak is designed to help those with motor function impairments and speech difficulties to communicate more easily. The app lets people use their eyes to select pre-written phrases and have them spoken out loud.

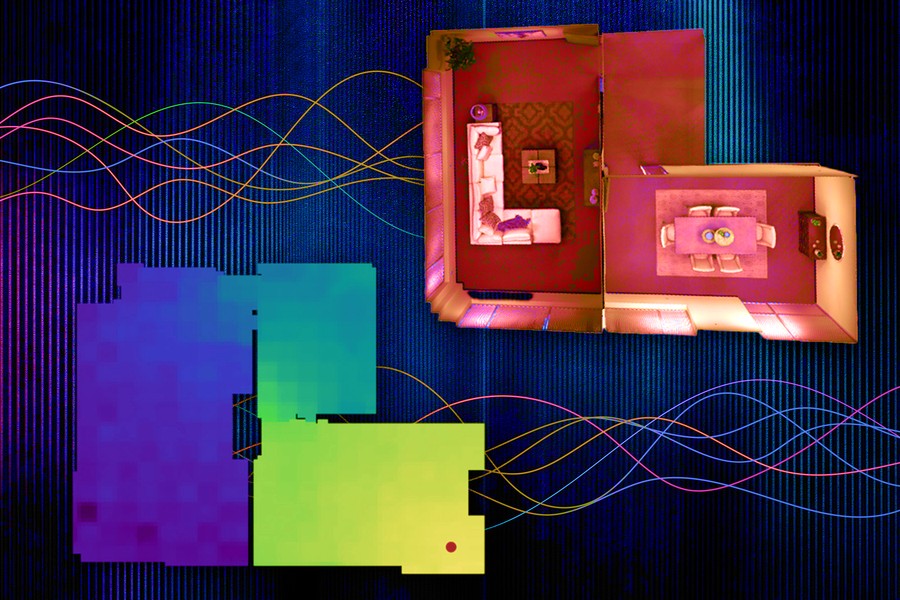

MIT researchers have developed a machine-learning technique that precisely collects and models the underlying acoustics of a location from just a limited number of sound recordings.

By 2050, humanity will have to almost double the global food supply to ensure that everyone on the planet has enough to eat. With climate change accelerating, water resources dwindling, and arable land eroding, doing so sustainably will be a huge challenge.

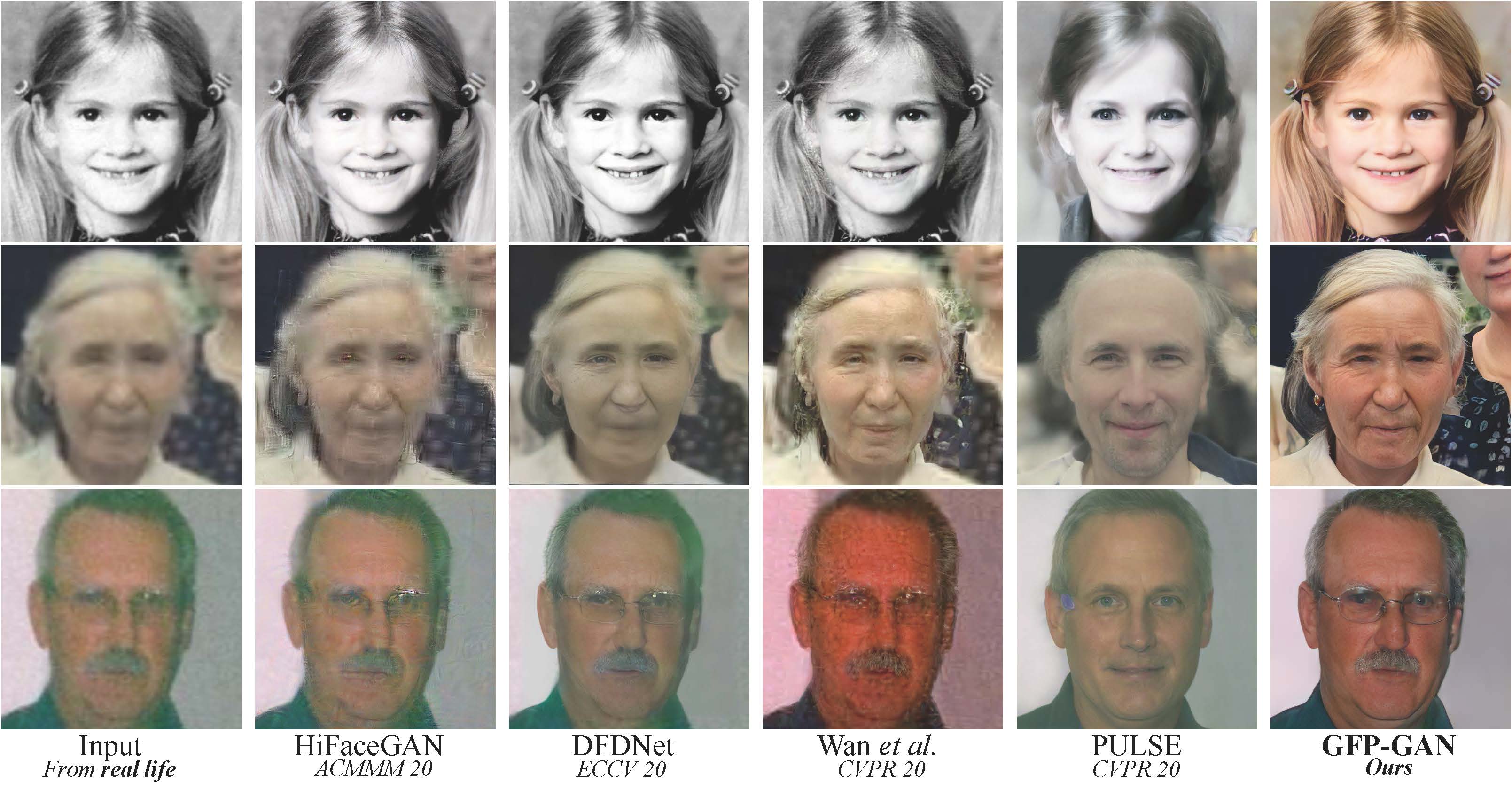

Over the last decade, one of the biggest issues in the gaming industry has been the explosive growth in the production cost of AAA video games. Studios are always on the lookout for technologies that could help bring down the cost of game development. Recent advances in neural image generation models offer some hope that this goal may not be far off.

Can computers think? Can AI models be conscious? These and similar questions often come up in discussions of recent AI progress achieved by natural language models such as GPT-3, LaMDA, and other transformers. They remain controversial and border on paradox, because they usually rest on many hidden assumptions and misconceptions about how the brain works and what thinking means. The only way forward is to state these assumptions explicitly and then explore how human information processing could be replicated by machines.

Filters that improve photo quality no longer surprise anyone, but the restoration of old portraits still leaves much to be desired. Older photos tend to be too blurry for standard image sharpening methods to work on them.

Facebook has released the NLLB project (No Language Left Behind). The main feature of this development is the coverage of more than two hundred languages, including rare languages of African and Australian peoples. In addition, Facebook has applied a new approach to the machine learning model, where the translation is carried out directly from one language to another, without intermediate translation into English.

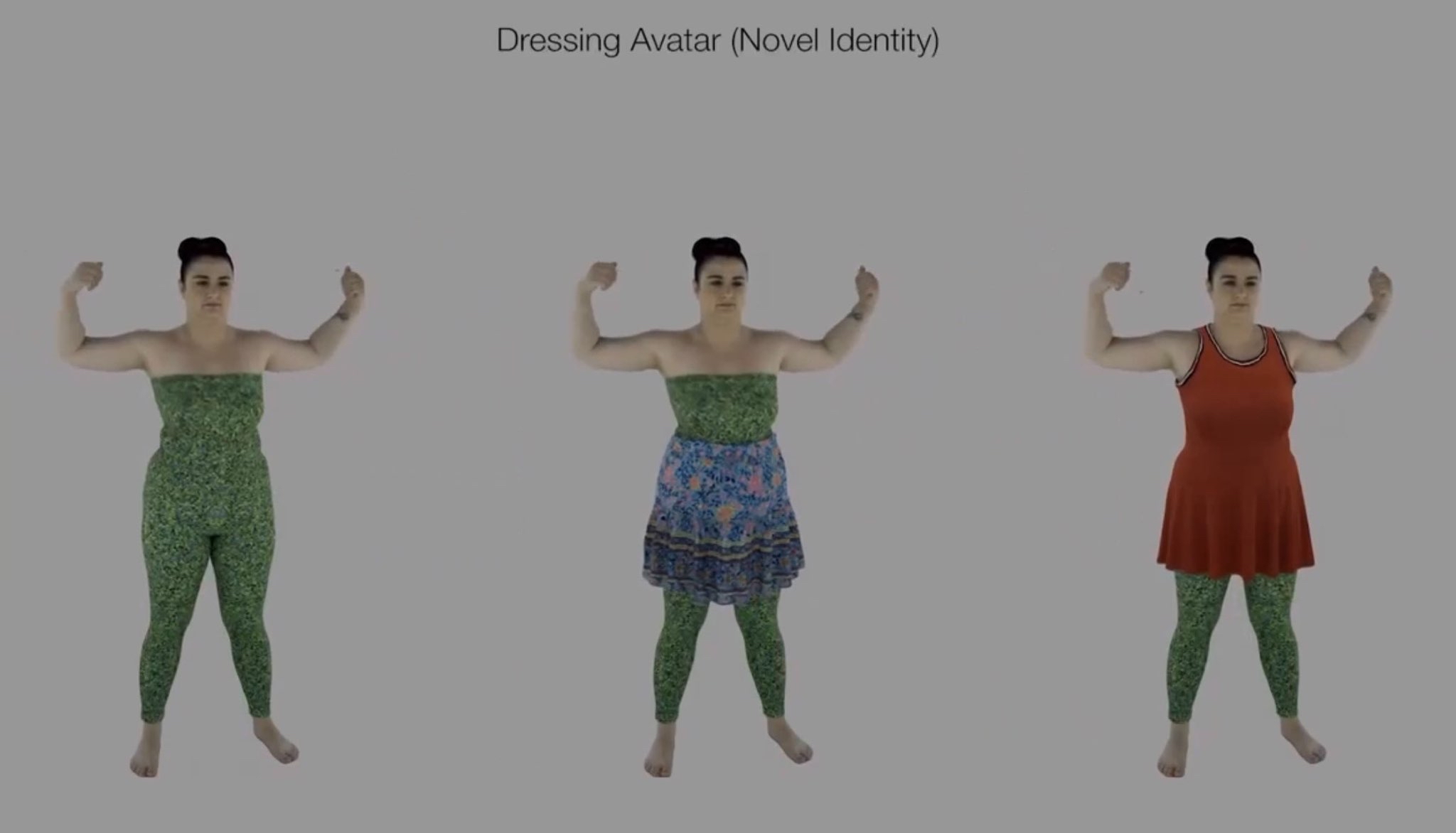

Animated avatars have long been a part of our lives, but realistic modeling of clothing animation remains an open challenge.

On the one hand, modern physical modeling techniques can generate realistic clothing geometry at interactive speed. On the other hand, modeling a photorealistic appearance usually requires physical rendering, which is too expensive for interactive applications.

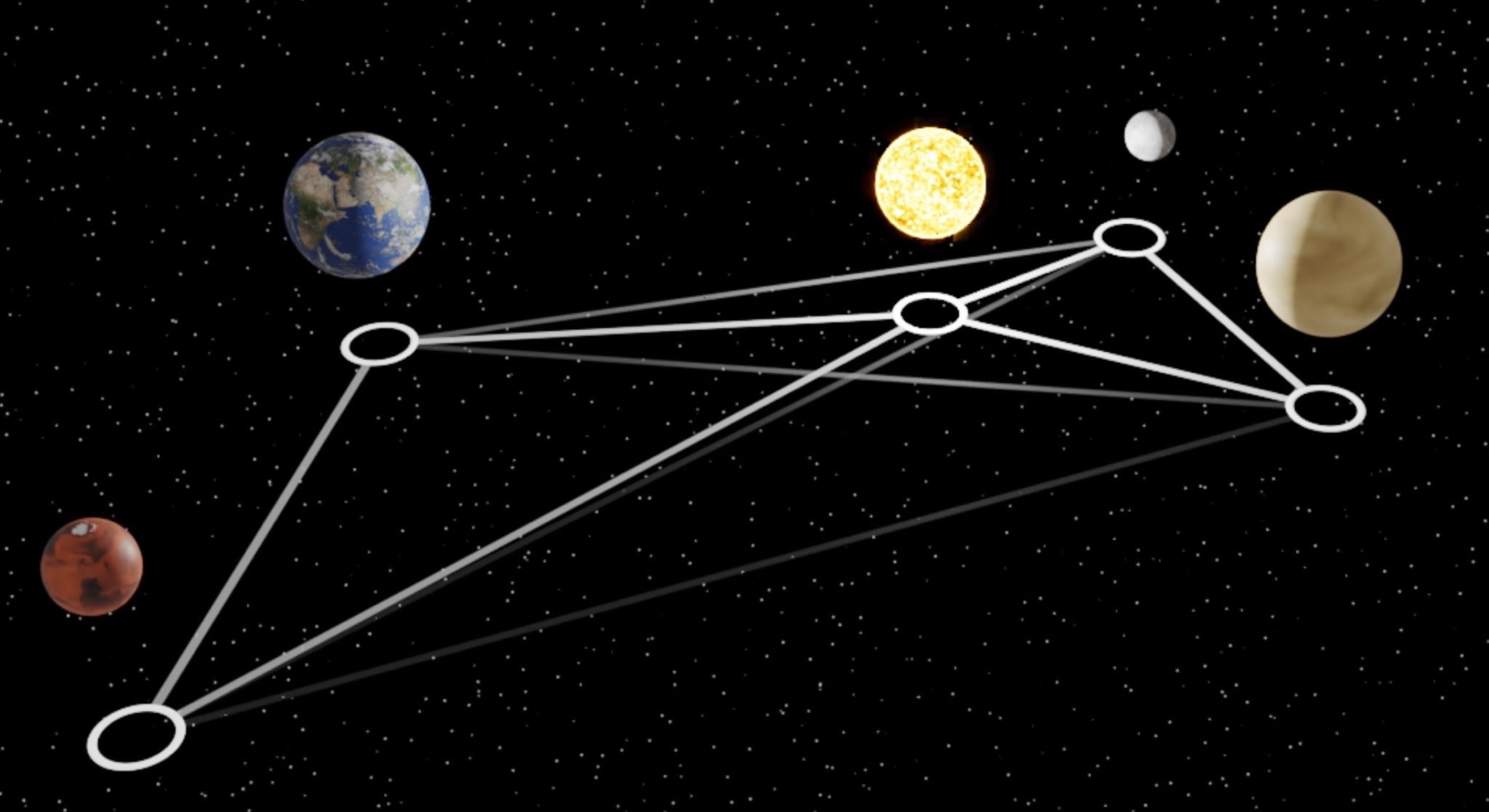

A group of scientists using machine learning "rediscovered" the law of universal gravitation.

To do this, they trained a "graph neural network" to simulate the dynamics of the Sun, planets and large moons of the solar system from 30 years of observations. Then they used symbolic regression to discover the analytical expression for the force law implicitly learned by the neural network.
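The idea of recovering an analytical force law from data can be illustrated with a deliberately simplified sketch. This is not the researchers' pipeline: it uses no graph neural network, and a plain log-log least-squares fit stands in for symbolic regression. The data are synthetic, generated from Newton's law itself, and the names `newton_force` and `fit_power_law` are hypothetical.

```python
import math

G = 6.674e-11  # gravitational constant in SI units


def newton_force(m1, m2, r):
    """Ground-truth force used only to generate the synthetic observations."""
    return G * m1 * m2 / r**2


def fit_power_law(xs, ys):
    """Least-squares fit of y = c * x**p in log-log space; returns (c, p)."""
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    sxx = sum((a - mean_x) ** 2 for a in log_x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(log_x, log_y))
    p = sxy / sxx                      # slope of the line = exponent
    c = math.exp(mean_y - p * mean_x)  # intercept = log of the coefficient
    return c, p


# Synthetic "observations": a Sun-like and an Earth-like mass at many distances.
m_sun, m_planet = 1.989e30, 5.972e24
distances = [0.4e11 * k for k in range(1, 30)]
forces = [newton_force(m_sun, m_planet, r) for r in distances]

coeff, exponent = fit_power_law(distances, forces)
print(f"fitted exponent: {exponent:.4f}")
```

On this noise-free data the fitted exponent comes out very close to -2, the inverse-square dependence on distance. Real symbolic regression searches over a much larger space of candidate expressions rather than assuming a power law up front.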